Discussion: kqueue

Hi,

On the WaitEventSet thread I posted a small patch to add kqueue support[1]. Since then I peeked at how some other software[2] interacts with kqueue and discovered that there are platforms including NetBSD where kevent.udata is an intptr_t instead of a void *. Here's a version which should compile there. Would any NetBSD user be interested in testing this? (An alternative would be to make configure to test for this with some kind of AC_COMPILE_IFELSE incantation but the steamroller cast is simpler.)

[1] http://www.postgresql.org/message-id/CAEepm=1dZ_mC+V3YtB79zf27280nign8MKOLxy2FKhvc1RzN=g@mail.gmail.com
[2] https://github.com/libevent/libevent/commit/5602e451ce872d7d60c640590113c5a81c3fc389

--
Thomas Munro
http://www.enterprisedb.com
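For readers unfamiliar with the cast being described, here is a minimal sketch of the idea. It deliberately does not include the real <sys/event.h>: the two structs are hypothetical stand-ins for struct kevent on platforms where udata is a void * and where it is an intptr_t, and the macro name UDATA_AS_PTR is invented for illustration. The attached patch may well spell this differently.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for struct kevent; only the udata member matters. */
struct kevent_ptr_udata { void *udata; };     /* udata declared as void * */
struct kevent_int_udata { intptr_t udata; };  /* udata declared as intptr_t */

/*
 * "Steamroller" cast: treat the udata member as a void * lvalue whatever its
 * declared type is. This assumes sizeof(intptr_t) == sizeof(void *) and
 * bypasses the type system, so a compiler may warn about type punning.
 */
#define UDATA_AS_PTR(kev) (*((void **) &(kev)->udata))

/* Store a pointer in the void * variant and read it back. */
void *
roundtrip_ptr_udata(void *p)
{
    struct kevent_ptr_udata kev;

    UDATA_AS_PTR(&kev) = p;
    return UDATA_AS_PTR(&kev);
}

/* The same source code works unchanged for the intptr_t variant. */
void *
roundtrip_int_udata(void *p)
{
    struct kevent_int_udata kev;

    UDATA_AS_PTR(&kev) = p;
    return UDATA_AS_PTR(&kev);
}
```

The point of the design is that one macro serves both platform variants without a configure test, at the price of a cast that the compiler cannot check.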

Attachments

On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro <thomas.munro@enterprisedb.com> wrote: > On the WaitEventSet thread I posted a small patch to add kqueue > support[1]. Since then I peeked at how some other software[2] > interacts with kqueue and discovered that there are platforms > including NetBSD where kevent.udata is an intptr_t instead of a void > *. Here's a version which should compile there. Would any NetBSD > user be interested in testing this? (An alternative would be to make > configure to test for this with some kind of AC_COMPILE_IFELSE > incantation but the steamroller cast is simpler.) Did you code this up blind or do you have a NetBSD machine yourself? -- Robert Haas EnterpriseDB: http://www.enterprisedb.com The Enterprise PostgreSQL Company

On 2016-04-21 14:15:53 -0400, Robert Haas wrote: > On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro > <thomas.munro@enterprisedb.com> wrote: > > On the WaitEventSet thread I posted a small patch to add kqueue > > support[1]. Since then I peeked at how some other software[2] > > interacts with kqueue and discovered that there are platforms > > including NetBSD where kevent.udata is an intptr_t instead of a void > > *. Here's a version which should compile there. Would any NetBSD > > user be interested in testing this? (An alternative would be to make > > configure to test for this with some kind of AC_COMPILE_IFELSE > > incantation but the steamroller cast is simpler.) > > Did you code this up blind or do you have a NetBSD machine yourself? RMT, what do you think, should we try to get this into 9.6? It's feasible that the performance problem 98a64d0bd713c addressed is also present on free/netbsd. - Andres

On Thu, Apr 21, 2016 at 2:22 PM, Andres Freund <andres@anarazel.de> wrote: > On 2016-04-21 14:15:53 -0400, Robert Haas wrote: >> On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro >> <thomas.munro@enterprisedb.com> wrote: >> > On the WaitEventSet thread I posted a small patch to add kqueue >> > support[1]. Since then I peeked at how some other software[2] >> > interacts with kqueue and discovered that there are platforms >> > including NetBSD where kevent.udata is an intptr_t instead of a void >> > *. Here's a version which should compile there. Would any NetBSD >> > user be interested in testing this? (An alternative would be to make >> > configure to test for this with some kind of AC_COMPILE_IFELSE >> > incantation but the steamroller cast is simpler.) >> >> Did you code this up blind or do you have a NetBSD machine yourself? > > RMT, what do you think, should we try to get this into 9.6? It's > feasible that the performance problem 98a64d0bd713c addressed is also > present on free/netbsd. My personal opinion is that it would be a reasonable thing to do if somebody can demonstrate that it actually solves a real problem. Absent that, I don't think we should rush it in. -- Robert Haas EnterpriseDB: http://www.enterprisedb.com The Enterprise PostgreSQL Company

Robert Haas wrote: > On Thu, Apr 21, 2016 at 2:22 PM, Andres Freund <andres@anarazel.de> wrote: > > On 2016-04-21 14:15:53 -0400, Robert Haas wrote: > >> On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro > >> <thomas.munro@enterprisedb.com> wrote: > >> > On the WaitEventSet thread I posted a small patch to add kqueue > >> > support[1]. Since then I peeked at how some other software[2] > >> > interacts with kqueue and discovered that there are platforms > >> > including NetBSD where kevent.udata is an intptr_t instead of a void > >> > *. Here's a version which should compile there. Would any NetBSD > >> > user be interested in testing this? (An alternative would be to make > >> > configure to test for this with some kind of AC_COMPILE_IFELSE > >> > incantation but the steamroller cast is simpler.) > >> > >> Did you code this up blind or do you have a NetBSD machine yourself? > > > > RMT, what do you think, should we try to get this into 9.6? It's > > feasible that the performance problem 98a64d0bd713c addressed is also > > present on free/netbsd. > > My personal opinion is that it would be a reasonable thing to do if > somebody can demonstrate that it actually solves a real problem. > Absent that, I don't think we should rush it in. My first question is whether there are platforms that use kqueue on which the WaitEventSet stuff proves to be a bottleneck. I vaguely recall that MacOS X in particular doesn't scale terribly well for other reasons, and I don't know if anybody runs *BSD in large machines. On the other hand, there's plenty of hackers running their laptops on MacOS X these days, so presumably any platform dependent problem would be discovered quickly enough. As for NetBSD, it seems mostly a fringe platform, doesn't it? We would discover serious dependency problems quickly enough on the buildfarm ... except that the only netbsd buildfarm member hasn't reported in over two weeks. Am I mistaken in any of these points? 
(Our coverage of the BSD platforms leaves much to be desired FWIW.) -- Álvaro Herrera http://www.2ndQuadrant.com/ PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

On Thu, Apr 21, 2016 at 3:31 PM, Alvaro Herrera <alvherre@2ndquadrant.com> wrote: > Robert Haas wrote: >> On Thu, Apr 21, 2016 at 2:22 PM, Andres Freund <andres@anarazel.de> wrote: >> > On 2016-04-21 14:15:53 -0400, Robert Haas wrote: >> >> On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro >> >> <thomas.munro@enterprisedb.com> wrote: >> >> > On the WaitEventSet thread I posted a small patch to add kqueue >> >> > support[1]. Since then I peeked at how some other software[2] >> >> > interacts with kqueue and discovered that there are platforms >> >> > including NetBSD where kevent.udata is an intptr_t instead of a void >> >> > *. Here's a version which should compile there. Would any NetBSD >> >> > user be interested in testing this? (An alternative would be to make >> >> > configure to test for this with some kind of AC_COMPILE_IFELSE >> >> > incantation but the steamroller cast is simpler.) >> >> >> >> Did you code this up blind or do you have a NetBSD machine yourself? >> > >> > RMT, what do you think, should we try to get this into 9.6? It's >> > feasible that the performance problem 98a64d0bd713c addressed is also >> > present on free/netbsd. >> >> My personal opinion is that it would be a reasonable thing to do if >> somebody can demonstrate that it actually solves a real problem. >> Absent that, I don't think we should rush it in. > > My first question is whether there are platforms that use kqueue on > which the WaitEventSet stuff proves to be a bottleneck. I vaguely > recall that MacOS X in particular doesn't scale terribly well for other > reasons, and I don't know if anybody runs *BSD in large machines. > > On the other hand, there's plenty of hackers running their laptops on > MacOS X these days, so presumably any platform dependent problem would > be discovered quickly enough. As for NetBSD, it seems mostly a fringe > platform, doesn't it? We would discover serious dependency problems > quickly enough on the buildfarm ... 
except that the only netbsd > buildfarm member hasn't reported in over two weeks. > > Am I mistaken in any of these points? > > (Our coverage of the BSD platforms leaves much to be desired FWIW.) My impression is that the Linux problem only manifested itself on large machines. I might be wrong about that. But if that's true, then we might not see regressions on other platforms just because people aren't running those operating systems on big enough hardware. -- Robert Haas EnterpriseDB: http://www.enterprisedb.com The Enterprise PostgreSQL Company

On 2016-04-21 14:25:06 -0400, Robert Haas wrote: > On Thu, Apr 21, 2016 at 2:22 PM, Andres Freund <andres@anarazel.de> wrote: > > On 2016-04-21 14:15:53 -0400, Robert Haas wrote: > >> On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro > >> <thomas.munro@enterprisedb.com> wrote: > >> > On the WaitEventSet thread I posted a small patch to add kqueue > >> > support[1]. Since then I peeked at how some other software[2] > >> > interacts with kqueue and discovered that there are platforms > >> > including NetBSD where kevent.udata is an intptr_t instead of a void > >> > *. Here's a version which should compile there. Would any NetBSD > >> > user be interested in testing this? (An alternative would be to make > >> > configure to test for this with some kind of AC_COMPILE_IFELSE > >> > incantation but the steamroller cast is simpler.) > >> > >> Did you code this up blind or do you have a NetBSD machine yourself? > > > > RMT, what do you think, should we try to get this into 9.6? It's > > feasible that the performance problem 98a64d0bd713c addressed is also > > present on free/netbsd. > > My personal opinion is that it would be a reasonable thing to do if > somebody can demonstrate that it actually solves a real problem. > Absent that, I don't think we should rush it in. On linux you needed a 2 socket machine to demonstrate the problem, but both old ones (my 2009 workstation) and new ones were sufficient. I'd be surprised if the situation on freebsd is any better, except that you might hit another scalability bottleneck earlier. I doubt there's many real postgres instances operating on bigger hardware on freebsd, with sufficient throughput to show the problem. So I think the argument for including is more along trying to be "nice" to more niche-y OSs. I really don't have any opinion either way. - Andres

On Fri, Apr 22, 2016 at 12:21 PM, Andres Freund <andres@anarazel.de> wrote: > On 2016-04-21 14:25:06 -0400, Robert Haas wrote: >> On Thu, Apr 21, 2016 at 2:22 PM, Andres Freund <andres@anarazel.de> wrote: >> > On 2016-04-21 14:15:53 -0400, Robert Haas wrote: >> >> On Tue, Mar 29, 2016 at 7:53 PM, Thomas Munro >> >> <thomas.munro@enterprisedb.com> wrote: >> >> > On the WaitEventSet thread I posted a small patch to add kqueue >> >> > support[1]. Since then I peeked at how some other software[2] >> >> > interacts with kqueue and discovered that there are platforms >> >> > including NetBSD where kevent.udata is an intptr_t instead of a void >> >> > *. Here's a version which should compile there. Would any NetBSD >> >> > user be interested in testing this? (An alternative would be to make >> >> > configure to test for this with some kind of AC_COMPILE_IFELSE >> >> > incantation but the steamroller cast is simpler.) >> >> >> >> Did you code this up blind or do you have a NetBSD machine yourself? >> > >> > RMT, what do you think, should we try to get this into 9.6? It's >> > feasible that the performance problem 98a64d0bd713c addressed is also >> > present on free/netbsd. >> >> My personal opinion is that it would be a reasonable thing to do if >> somebody can demonstrate that it actually solves a real problem. >> Absent that, I don't think we should rush it in. > > On linux you needed a 2 socket machine to demonstrate the problem, but > both old ones (my 2009 workstation) and new ones were sufficient. I'd be > surprised if the situation on freebsd is any better, except that you > might hit another scalability bottleneck earlier. > > I doubt there's many real postgres instances operating on bigger > hardware on freebsd, with sufficient throughput to show the problem. So > I think the argument for including is more along trying to be "nice" to > more niche-y OSs. What has BSD ever done for us?! (Joke...) 
I vote to leave this patch in the next commitfest where it is, and reconsider if someone shows up with a relevant problem report on large systems. I can't see any measurable performance difference on a 4 core laptop running FreeBSD 10.3. Maybe kqueue will make more difference even on smaller systems in future releases if we start using big wait sets for distributed/asynchronous work, in-core pooling/admission control etc. Here's a new version of the patch that fixes some stupid bugs. I have run regression tests and some basic sanity checks on OSX 10.11.4, FreeBSD 10.3, NetBSD 7.0 and OpenBSD 5.8. There is still room to make an improvement that would drop the syscall from AddWaitEventToSet and ModifyWaitEvent, compressing wait set modifications and waiting into a single syscall (kqueue's claimed advantage over the competition). While doing that I discovered that unpatched master doesn't actually build on recent NetBSD systems because our static function strtoi clashes with a non-standard libc function of the same name[1] declared in inttypes.h. Maybe we should rename it, like in the attached? [1] http://netbsd.gw.com/cgi-bin/man-cgi?strtoi++NetBSD-current -- Thomas Munro http://www.enterprisedb.com

Attachments

On 2016-04-22 20:39:27 +1200, Thomas Munro wrote: > I vote to leave this patch in the next commitfest where it is, and > reconsider if someone shows up with a relevant problem report on large > systems. Sounds good! > Here's a new version of the patch that fixes some stupid bugs. I have > run regression tests and some basic sanity checks on OSX 10.11.4, > FreeBSD 10.3, NetBSD 7.0 and OpenBSD 5.8. There is still room to make > an improvement that would drop the syscall from AddWaitEventToSet and > ModifyWaitEvent, compressing wait set modifications and waiting into a > single syscall (kqueue's claimed advantage over the competition). I find that not to be particularly interesting, and would rather want to avoid adding complexity for it. > While doing that I discovered that unpatched master doesn't actually > build on recent NetBSD systems because our static function strtoi > clashes with a non-standard libc function of the same name[1] declared > in inttypes.h. Maybe we should rename it, like in the attached? Yuck. That's a new function they introduced? That code hasn't changed in a while.... Andres

On Sat, Apr 23, 2016 at 4:36 AM, Andres Freund <andres@anarazel.de> wrote: > On 2016-04-22 20:39:27 +1200, Thomas Munro wrote: >> While doing that I discovered that unpatched master doesn't actually >> build on recent NetBSD systems because our static function strtoi >> clashes with a non-standard libc function of the same name[1] declared >> in inttypes.h. Maybe we should rename it, like in the attached? > > Yuck. That's a new function they introduced? That code hasn't changed in > a while.... Yes, according to the man page it appeared in NetBSD 7.0. That was released in September 2015, and our buildfarm has only NetBSD 5.x systems. I see that the maintainers of the NetBSD pg package deal with this with a preprocessor kludge: http://cvsweb.netbsd.org/bsdweb.cgi/pkgsrc/databases/postgresql95/patches/patch-src_backend_utils_adt_datetime.c?rev=1.1 What is the policy for that kind of thing -- do nothing until someone cares enough about the platform to supply a buildfarm animal? -- Thomas Munro http://www.enterprisedb.com

Thomas Munro wrote: > On Sat, Apr 23, 2016 at 4:36 AM, Andres Freund <andres@anarazel.de> wrote: > > On 2016-04-22 20:39:27 +1200, Thomas Munro wrote: > >> While doing that I discovered that unpatched master doesn't actually > >> build on recent NetBSD systems because our static function strtoi > >> clashes with a non-standard libc function of the same name[1] declared > >> in inttypes.h. Maybe we should rename it, like in the attached? > > > > Yuck. That's a new function they introduced? That code hasn't changed in > > a while.... > > Yes, according to the man page it appeared in NetBSD 7.0. That was > released in September 2015, and our buildfarm has only NetBSD 5.x > systems. I see that the maintainers of the NetBSD pg package deal > with this with a preprocessor kludge: > > http://cvsweb.netbsd.org/bsdweb.cgi/pkgsrc/databases/postgresql95/patches/patch-src_backend_utils_adt_datetime.c?rev=1.1 > > What is the policy for that kind of thing -- do nothing until someone > cares enough about the platform to supply a buildfarm animal? Well, if the platform is truly alive, we would have gotten complaints already. Since we haven't, maybe nobody cares, so why should we? I would rename our function nonetheless FWIW; the name seems far too generic to me. pg_strtoi? -- Álvaro Herrera http://www.2ndQuadrant.com/ PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

On 2016-04-23 10:12:12 +1200, Thomas Munro wrote: > What is the policy for that kind of thing -- do nothing until someone > cares enough about the platform to supply a buildfarm animal? I think we should fix it, I just want to make sure we understand why the error is appearing now. Since we now do... - Andres

On 2016-04-22 19:25:06 -0300, Alvaro Herrera wrote: > Since we haven't, maybe nobody cares, so why should we? I guess it's to a good degree because netbsd has pg packages, and it's fixed there? > would rename our function nonetheless FWIW; the name seems far too > generic to me. Yea. > pg_strtoi? I think that's what Thomas did upthread. Are you taking this one then? Greetings, Andres Freund

Thomas Munro <thomas.munro@enterprisedb.com> writes: > On Sat, Apr 23, 2016 at 4:36 AM, Andres Freund <andres@anarazel.de> wrote: >> On 2016-04-22 20:39:27 +1200, Thomas Munro wrote: >>> While doing that I discovered that unpatched master doesn't actually >>> build on recent NetBSD systems because our static function strtoi >>> clashes with a non-standard libc function of the same name[1] declared >>> in inttypes.h. Maybe we should rename it, like in the attached? >> Yuck. That's a new function they introduced? That code hasn't changed in >> a while.... > Yes, according to the man page it appeared in NetBSD 7.0. That was > released in September 2015, and our buildfarm has only NetBSD 5.x > systems. I see that the maintainers of the NetBSD pg package deal > with this with a preprocessor kludge: > http://cvsweb.netbsd.org/bsdweb.cgi/pkgsrc/databases/postgresql95/patches/patch-src_backend_utils_adt_datetime.c?rev=1.1 > What is the policy for that kind of thing -- do nothing until someone > cares enough about the platform to supply a buildfarm animal? There's no set policy, but certainly a promise to put up a buildfarm animal would establish that somebody actually cares about keeping Postgres running on the platform. Without one, we might fix a specific problem when reported, but we'd have no way to know about new problems. Rooting through that patches directory reveals quite a number of random-looking patches, most of which we certainly wouldn't take without a lot more than zero explanation. It's hard to tell which are actually needed, but at least some don't seem to have anything to do with building for NetBSD. regards, tom lane

Andres Freund <andres@anarazel.de> writes:
>> pg_strtoi?

> I think that's what Thomas did upthread. Are you taking this one then?

I'd go with just "strtoint". We have "strtoint64" elsewhere.

regards, tom lane

Tom Lane wrote: > Andres Freund <andres@anarazel.de> writes: > >> pg_strtoi? > > > I think that's what Thomas did upthread. Are you taking this one then? > > I'd go with just "strtoint". We have "strtoint64" elsewhere. For closure of this subthread: this rename was committed by Tom as 0ab3595e5bb5. -- Álvaro Herrera http://www.2ndQuadrant.com/ PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services
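As a rough illustration of the rename discussed in this subthread, a helper of this kind might look like the following. This is a sketch only, not the code from commit 0ab3595e5bb5; in particular the clamping on overflow is an assumption made here to keep the example well defined.

```c
#include <errno.h>
#include <limits.h>
#include <stdlib.h>

/*
 * Like strtol(), but returns int and reports values that do not fit in an
 * int by setting errno to ERANGE. The name avoids NetBSD's libc strtoi().
 */
int
strtoint(const char *nptr, char **endptr, int base)
{
    long        val = strtol(nptr, endptr, base);

    /* On platforms where long is wider than int, check the range ourselves. */
    if (val < INT_MIN || val > INT_MAX)
    {
        errno = ERANGE;
        /* Clamping is a choice of this sketch, not taken from the thread. */
        return (val < 0) ? INT_MIN : INT_MAX;
    }
    return (int) val;
}
```

Callers would set errno to 0 before the call and check it afterwards, as with strtol() itself.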

On Fri, Jun 3, 2016 at 4:02 AM, Alvaro Herrera <alvherre@2ndquadrant.com> wrote: > Tom Lane wrote: >> Andres Freund <andres@anarazel.de> writes: >> >> pg_strtoi? >> >> > I think that's what Thomas did upthread. Are you taking this one then? >> >> I'd go with just "strtoint". We have "strtoint64" elsewhere. > > For closure of this subthread: this rename was committed by Tom as > 0ab3595e5bb5. Thanks. And here is a new version of the kqueue patch. The previous version doesn't apply on top of recent commit a3b30763cc8686f5b4cd121ef0bf510c1533ac22, which sprinkled some MAXALIGN macros nearby. I've now done the same thing with the kevent struct because it's cheap, uniform with the other cases and could matter on some platforms for the same reason. It's in the September commitfest here: https://commitfest.postgresql.org/10/597/ -- Thomas Munro http://www.enterprisedb.com
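The alignment point can be sketched as follows, assuming the wait set is carved out of one allocation holding a header followed by several arrays. SKETCH_MAXALIGN and the Sketch* types are hypothetical stand-ins (PostgreSQL's own MAXALIGN is defined in its headers and is not reproduced here); the sketch uses C11's max_align_t instead.

```c
#include <stddef.h>
#include <stdint.h>

/* Round len up to the platform's maximum alignment (hypothetical macro). */
#define SKETCH_MAXALIGN(len) \
    (((size_t) (len) + (_Alignof(max_align_t) - 1)) & \
     ~((size_t) (_Alignof(max_align_t) - 1)))

/* Hypothetical per-event records standing in for the real structs. */
typedef struct SketchEvent { int fd; uint32_t events; } SketchEvent;
typedef struct SketchKernelEvent { uintptr_t ident; short filter; void *udata; } SketchKernelEvent;

/* Offsets of each array within the single allocation, plus its total size. */
typedef struct SketchLayout
{
    size_t      events_off;
    size_t      kernel_events_off;
    size_t      total;
} SketchLayout;

SketchLayout
sketch_layout(size_t header_size, size_t nevents)
{
    SketchLayout l;
    size_t      sz = 0;

    /* Pad every piece, so whatever follows starts maximally aligned. */
    sz += SKETCH_MAXALIGN(header_size);
    l.events_off = sz;
    sz += SKETCH_MAXALIGN(sizeof(SketchEvent) * nevents);
    l.kernel_events_off = sz;
    sz += SKETCH_MAXALIGN(sizeof(SketchKernelEvent) * nevents);
    l.total = sz;
    return l;
}
```

Padding each array costs at most a few bytes per wait set, which is presumably why it is described above as cheap.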

Attachments

On 2016-06-03 01:45, Thomas Munro wrote:
> On Fri, Jun 3, 2016 at 4:02 AM, Alvaro Herrera <alvherre@2ndquadrant.com> wrote:
>> Tom Lane wrote:
>>> Andres Freund <andres@anarazel.de> writes:
>>>>> pg_strtoi?
>>>
>>>> I think that's what Thomas did upthread. Are you taking this one then?
>>>
>>> I'd go with just "strtoint". We have "strtoint64" elsewhere.
>>
>> For closure of this subthread: this rename was committed by Tom as
>> 0ab3595e5bb5.
>
> Thanks. And here is a new version of the kqueue patch. The previous
> version doesn't apply on top of recent commit
> a3b30763cc8686f5b4cd121ef0bf510c1533ac22, which sprinkled some
> MAXALIGN macros nearby. I've now done the same thing with the kevent
> struct because it's cheap, uniform with the other cases and could
> matter on some platforms for the same reason.

I've tested and reviewed this, and it looks good to me, other than this part:

+ /*
+  * kevent guarantees that the change list has been processed in the EINTR
+  * case. Here we are only applying a change list so EINTR counts as
+  * success.
+  */

this doesn't seem to be guaranteed on old versions of FreeBSD or any other BSD flavors, so I don't think it's a good idea to bake the assumption into this code. Or what do you think?

.m

On Wed, Sep 7, 2016 at 12:32 AM, Marko Tiikkaja <marko@joh.to> wrote:
> I've tested and reviewed this, and it looks good to me, other than this
> part:
>
> + /*
> +  * kevent guarantees that the change list has been processed in the EINTR
> +  * case. Here we are only applying a change list so EINTR counts as
> +  * success.
> +  */
>
> this doesn't seem to be guaranteed on old versions of FreeBSD or any other
> BSD flavors, so I don't think it's a good idea to bake the assumption into
> this code. Or what do you think?

Thanks for the testing and review!

Hmm. Well spotted. I wrote that because the man page from FreeBSD 10.3 says:

    When kevent() call fails with EINTR error, all changes in the changelist have been applied.

This sentence is indeed missing from the OpenBSD, NetBSD and OSX man pages. It was introduced by FreeBSD commit r280818[1] which made kevent a Pthread cancellation point. I investigated whether it is also true in older FreeBSD and the rest of the BSD family. I believe the answer is yes.

1. That commit doesn't do anything that would change the situation: it just adds thread cancellation wrapper code to libc and libthr which exits under certain conditions but otherwise lets EINTR through to the caller. So I think the new sentence is documentation of the existing behaviour of the syscall.

2. I looked at the code in FreeBSD 4.1[2] (the original kqueue implementation from which all others derive) and the four modern OSes[3][4][5][6]. They vary a bit but in all cases, the first place that can produce EINTR appears to be in kqueue_scan when the (variously named) kernel sleep routine is invoked, which can return EINTR or ERESTART (later translated to EINTR because kevent doesn't support restarting). That comes after all changes have been applied. In fact it's unreachable if nevents is 0: OSX doesn't call kqueue_scan in that case, and the others return early from kqueue_scan in that case.

3. An old email[7] from Jonathan Lemon (creator of kqueue) seems to support that at least in respect of ancient FreeBSD. He wrote: "Technically, an EINTR is returned when a signal interrupts the process after it goes to sleep (that is, after it calls tsleep). So if (as an example) you call kevent() with a zero valued timespec, you'll never get EINTR, since there's no possibility of it sleeping."

So if I've understood correctly, what I wrote in the v4 patch is universally true, but it's also moot in this case: kevent cannot fail with errno == EINTR because nevents == 0. On that basis, here is a new version with the comment and special case for EINTR removed.

[1] https://svnweb.freebsd.org/base?view=revision&revision=280818
[2] https://github.com/freebsd/freebsd/blob/release/4.1.0/sys/kern/kern_event.c
[3] https://github.com/freebsd/freebsd/blob/master/sys/kern/kern_event.c
[4] https://github.com/IIJ-NetBSD/netbsd-src/blob/master/sys/kern/kern_event.c
[5] https://github.com/openbsd/src/blob/master/sys/kern/kern_event.c
[6] https://github.com/opensource-apple/xnu/blob/master/bsd/kern/kern_event.c
[7] http://marc.info/?l=freebsd-arch&m=98147346707952&w=2

--
Thomas Munro
http://www.enterprisedb.com
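A minimal sketch of the apply-only call under discussion, assuming the analysis above holds. The function name sketch_add_read_filter is hypothetical and this is not the patch's code. Only the standard kqueue calls EV_SET and kevent are used; on systems without kqueue the function simply fails with ENOSYS so that the example still compiles.

```c
#include <errno.h>
#include <stddef.h>

#if defined(__FreeBSD__) || defined(__NetBSD__) || defined(__OpenBSD__) || \
    defined(__DragonFly__) || defined(__APPLE__)
#define SKETCH_HAVE_KQUEUE 1
#include <sys/types.h>
#include <sys/event.h>
#include <sys/time.h>
#endif

/*
 * Register interest in readability of fd on the kqueue descriptor kq.
 * Returns 0 on success, -1 with errno set on failure.
 */
int
sketch_add_read_filter(int kq, int fd)
{
#ifdef SKETCH_HAVE_KQUEUE
    struct kevent change;

    /* udata is 0 here, which is valid for both void * and intptr_t. */
    EV_SET(&change, fd, EVFILT_READ, EV_ADD, 0, 0, 0);

    /*
     * nevents is 0, so no events are fetched and the kernel has no reason
     * to sleep. Following the reasoning in the message above, EINTR is not
     * expected on this path, so there is no retry loop.
     */
    if (kevent(kq, &change, 1, NULL, 0, NULL) < 0)
        return -1;
    return 0;
#else
    /* No kqueue on this platform: fail in a well-defined way. */
    (void) kq;
    (void) fd;
    errno = ENOSYS;
    return -1;
#endif
}
```

A caller that later waits for events would make a separate kevent call with a non-zero nevents, and that call is where EINTR handling would be needed.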

Attachments

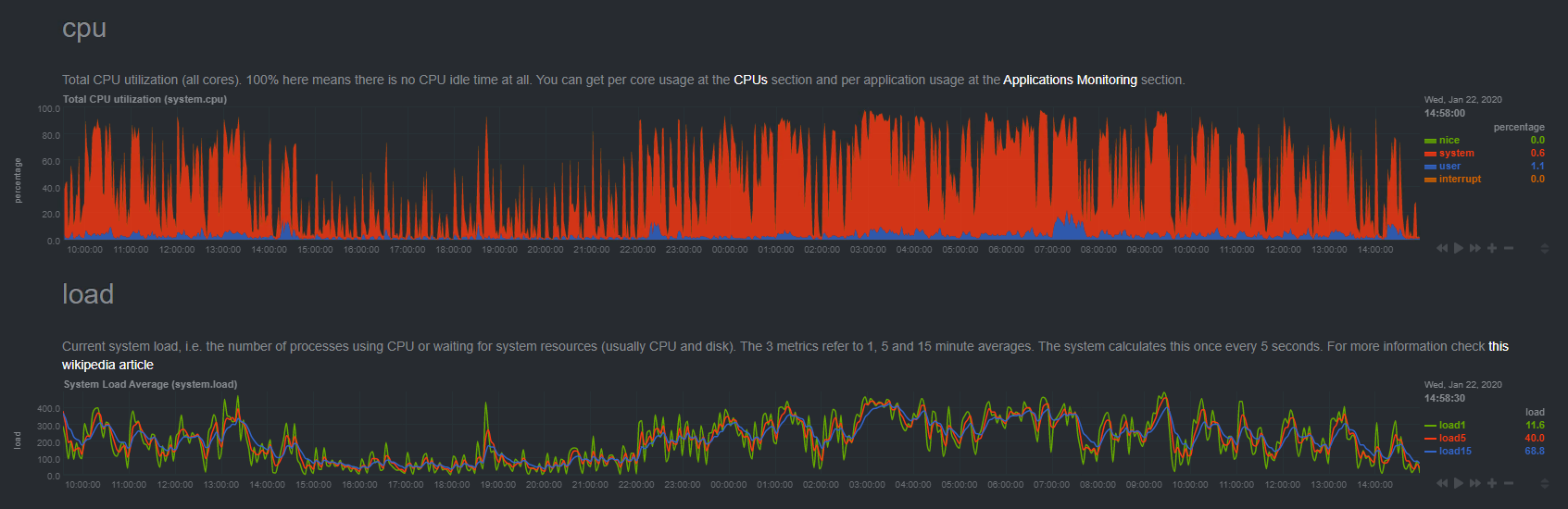

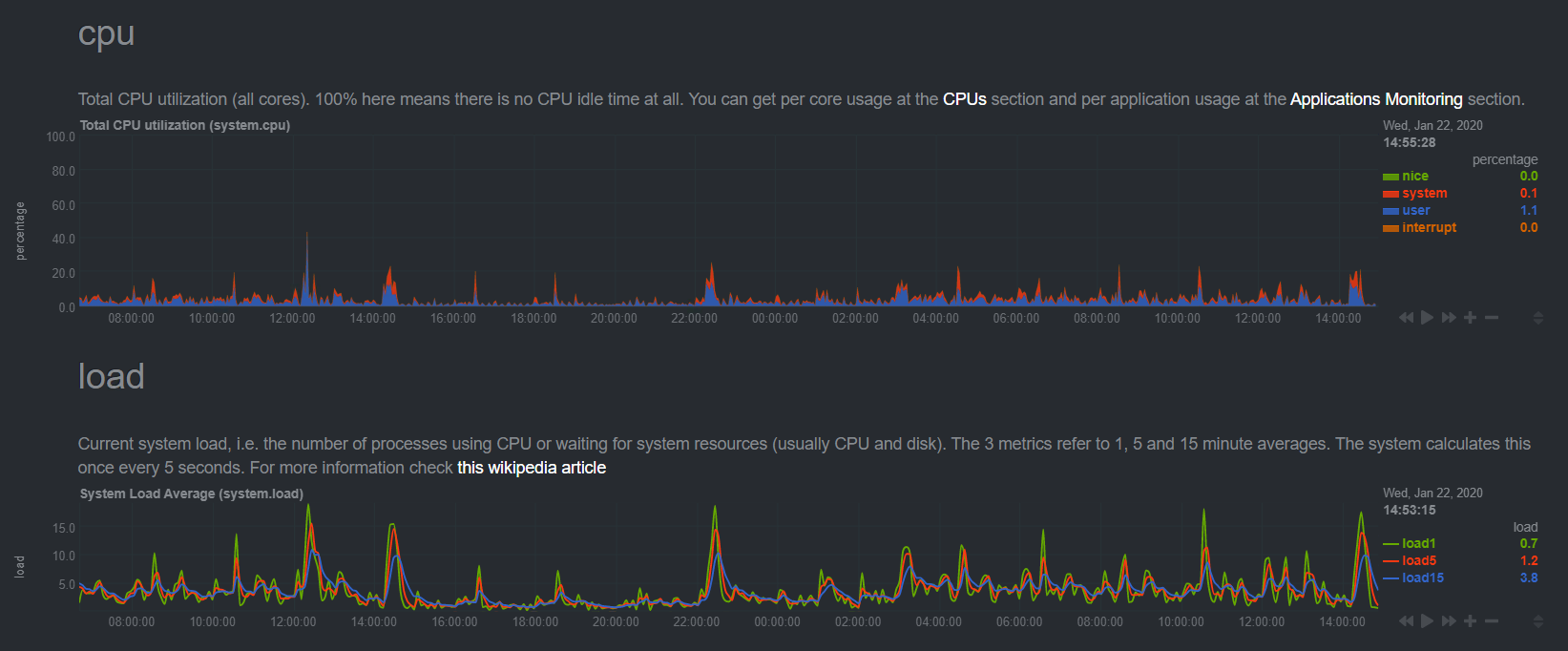

So, if I've understood correctly, the purpose of this patch is to improve performance on a multi-CPU system, which has the kqueue() function. Most notably, FreeBSD?

I launched a FreeBSD 10.3 instance on Amazon EC2 (ami-e0682b80), on a m4.10xlarge instance. That's a 40 core system, biggest available, I believe. I built PostgreSQL master on it, and ran pgbench to benchmark:

    pgbench -i -s 200 postgres
    pgbench -M prepared -j 36 -c 36 -S postgres -T20 -P1

I set shared_buffers to 10 GB, so that the whole database fits in cache. I tested that with and without kqueue-v5.patch

Result: I don't see any difference in performance. pgbench reports between 80,000 and 97,000 TPS, with or without the patch:

    [ec2-user@ip-172-31-17-174 ~/postgresql]$ ~/pgsql-install/bin/pgbench -M prepared -j 36 -c 36 -S postgres -T20 -P1
    starting vacuum...end.
    progress: 1.0 s, 94537.1 tps, lat 0.368 ms stddev 0.145
    progress: 2.0 s, 96745.9 tps, lat 0.368 ms stddev 0.143
    progress: 3.0 s, 93870.1 tps, lat 0.380 ms stddev 0.146
    progress: 4.0 s, 89482.9 tps, lat 0.399 ms stddev 0.146
    progress: 5.0 s, 87815.0 tps, lat 0.406 ms stddev 0.148
    progress: 6.0 s, 86415.5 tps, lat 0.413 ms stddev 0.145
    progress: 7.0 s, 86011.0 tps, lat 0.415 ms stddev 0.147
    progress: 8.0 s, 84923.0 tps, lat 0.420 ms stddev 0.147
    progress: 9.0 s, 84596.6 tps, lat 0.422 ms stddev 0.146
    progress: 10.0 s, 84537.7 tps, lat 0.422 ms stddev 0.146
    progress: 11.0 s, 83910.5 tps, lat 0.425 ms stddev 0.150
    progress: 12.0 s, 83738.2 tps, lat 0.426 ms stddev 0.150
    progress: 13.0 s, 83837.5 tps, lat 0.426 ms stddev 0.147
    progress: 14.0 s, 83578.4 tps, lat 0.427 ms stddev 0.147
    progress: 15.0 s, 83609.5 tps, lat 0.427 ms stddev 0.148
    progress: 16.0 s, 83423.5 tps, lat 0.428 ms stddev 0.151
    progress: 17.0 s, 83318.2 tps, lat 0.428 ms stddev 0.149
    progress: 18.0 s, 82992.7 tps, lat 0.430 ms stddev 0.149
    progress: 19.0 s, 83155.9 tps, lat 0.429 ms stddev 0.151
    progress: 20.0 s, 83209.0 tps, lat 0.429 ms stddev 0.152
    transaction type: <builtin: select only>
    scaling factor: 200
    query mode: prepared
    number of clients: 36
    number of threads: 36
    duration: 20 s
    number of transactions actually processed: 1723759
    latency average = 0.413 ms
    latency stddev = 0.149 ms
    tps = 86124.484867 (including connections establishing)
    tps = 86208.458034 (excluding connections establishing)

Is this test setup reasonable? I know very little about FreeBSD, I'm afraid, so I don't know how to profile or test that further than that.

If there's no measurable difference in performance, between kqueue() and poll(), I think we should forget about this. If there's a FreeBSD hacker out there that can demonstrate better results, I'm all for committing this, but I'm reluctant to add code if no-one can show the benefit.

- Heikki

Heikki Linnakangas <hlinnaka@iki.fi> writes:
> So, if I've understood correctly, the purpose of this patch is to
> improve performance on a multi-CPU system, which has the kqueue()
> function. Most notably, FreeBSD?

OS X also has this, so it might be worth trying on a multi-CPU Mac.

> If there's no measurable difference in performance, between kqueue() and
> poll(), I think we should forget about this.

I agree that we shouldn't add this unless it's demonstrably a win.
No opinion on whether your test is adequate.

regards, tom lane

On 09/13/2016 04:33 PM, Tom Lane wrote: > Heikki Linnakangas <hlinnaka@iki.fi> writes: >> So, if I've understood correctly, the purpose of this patch is to >> improve performance on a multi-CPU system, which has the kqueue() >> function. Most notably, FreeBSD? > > OS X also has this, so it might be worth trying on a multi-CPU Mac. > >> If there's no measurable difference in performance, between kqueue() and >> poll(), I think we should forget about this. > > I agree that we shouldn't add this unless it's demonstrably a win. > No opinion on whether your test is adequate. I'm marking this as "Returned with Feedback", waiting for someone to post test results that show a positive performance benefit from this. - Heikki

Hi, On 2016-09-13 16:08:39 +0300, Heikki Linnakangas wrote: > So, if I've understood correctly, the purpose of this patch is to improve > performance on a multi-CPU system, which has the kqueue() function. Most > notably, FreeBSD? I think it's not necessarily about the current system, but more about future uses of the WaitEventSet stuff. Some of that is going to use a lot more sockets. E.g. doing a parallel append over FDWs. > I launched a FreeBSD 10.3 instance on Amazon EC2 (ami-e0682b80), on a > m4.10xlarge instance. That's a 40 core system, biggest available, I believe. > I built PostgreSQL master on it, and ran pgbench to benchmark: > > pgbench -i -s 200 postgres > pgbench -M prepared -j 36 -c 36 -S postgres -T20 -P1 This seems likely to actually seldomly exercise the relevant code path. We only do the poll()/epoll_wait()/... when a read() doesn't return anything, but that seems likely to seldomly occur here. Using a lower thread count and a lot higher client count might change that. Note that the case where poll vs. epoll made a large difference (after the regression due to ac1d7945f86) on linux was only on fairly large machines, with high clients counts. Greetings, Andres Freund

On 13 September 2016 at 08:08, Heikki Linnakangas <hlinnaka@iki.fi> wrote: > So, if I've understood correctly, the purpose of this patch is to improve > performance on a multi-CPU system, which has the kqueue() function. Most > notably, FreeBSD? I'm getting a little fried from "self-documenting" patches, from multiple sources. I think we should make it a firm requirement to explain what a patch is actually about, with extra points for including with it a test that allows us to validate that. We don't have enough committer time to waste on such things. -- Simon Riggs http://www.2ndQuadrant.com/ PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

On Tue, Sep 13, 2016 at 11:36 AM, Simon Riggs <simon@2ndquadrant.com> wrote: > On 13 September 2016 at 08:08, Heikki Linnakangas <hlinnaka@iki.fi> wrote: >> So, if I've understood correctly, the purpose of this patch is to improve >> performance on a multi-CPU system, which has the kqueue() function. Most >> notably, FreeBSD? > > I'm getting a little fried from "self-documenting" patches, from > multiple sources. > > I think we should make it a firm requirement to explain what a patch > is actually about, with extra points for including with it a test that > allows us to validate that. We don't have enough committer time to > waste on such things. You've complained about this a whole bunch of times recently, but in most of those cases I didn't think there was any real unclarity. I agree that it's a good idea for a patch to be submitted with suitable submission notes, but it also isn't reasonable to expect those submission notes to be reposted with every single version of every patch. Indeed, I'd find that pretty annoying. Thomas linked back to the previous thread where this was discussed, which seems more or less sufficient. If committers are too busy to click on links in the patch submission emails, they have no business committing anything. -- Robert Haas EnterpriseDB: http://www.enterprisedb.com The Enterprise PostgreSQL Company

Andres Freund <andres@anarazel.de> writes:

> On 2016-09-13 16:08:39 +0300, Heikki Linnakangas wrote:

>> So, if I've understood correctly, the purpose of this patch is to improve

>> performance on a multi-CPU system, which has the kqueue() function. Most

>> notably, FreeBSD?

> I think it's not necessarily about the current system, but more about

> future uses of the WaitEventSet stuff. Some of that is going to use a

> lot more sockets. E.g. doing a parallel append over FDWs.

All fine, but the burden of proof has to be on the patch to show that

it does something significant. We don't want to be carrying around

platform-specific code, which necessarily has higher maintenance cost

than other code, without a darn good reason.

Also, if it's only a win on machines with dozens of CPUs, how many

people are running *BSD on that kind of iron? I think Linux is by

far the dominant kernel for such hardware. For sure Apple isn't

selling any machines like that.

regards, tom lane

On 2016-09-13 12:43:36 -0400, Tom Lane wrote:
> > I think it's not necessarily about the current system, but more about
> > future uses of the WaitEventSet stuff. Some of that is going to use a
> > lot more sockets. E.g. doing a parallel append over FDWs.

(note that I'm talking about network sockets not cpu sockets here)

> All fine, but the burden of proof has to be on the patch to show that
> it does something significant. We don't want to be carrying around
> platform-specific code, which necessarily has higher maintenance cost
> than other code, without a darn good reason.

No argument there.

> Also, if it's only a win on machines with dozens of CPUs, how many
> people are running *BSD on that kind of iron? I think Linux is by
> far the dominant kernel for such hardware. For sure Apple isn't
> selling any machines like that.

I'm not sure you need quite that big a machine, if you test a workload that currently reaches the poll().

Regards,
Andres

Andres Freund <andres@anarazel.de> writes:
> On 2016-09-13 12:43:36 -0400, Tom Lane wrote:
>> Also, if it's only a win on machines with dozens of CPUs, how many
>> people are running *BSD on that kind of iron? I think Linux is by
>> far the dominant kernel for such hardware. For sure Apple isn't
>> selling any machines like that.

> I'm not sure you need quite that big a machine, if you test a workload
> that currently reaches the poll().

Well, Thomas stated in
https://www.postgresql.org/message-id/CAEepm%3D1CwuAq35FtVBTZO-mnGFH1xEFtDpKQOf_b6WoEmdZZHA%40mail.gmail.com
that he hadn't been able to measure any performance difference, and I assume he was trying test cases from the WaitEventSet thread.

Also I notice that the WaitEventSet thread started with a simple pgbench test, so I don't really buy the claim that that's not a way that will reach the problem.

I'd be happy to see this go in if it can be shown to provide a measurable performance improvement, but so far we have only guesses that someday it *might* make a difference. That's not good enough to add to our maintenance burden IMO.

Anyway, the patch is in the archives now, so it won't be hard to resurrect if the situation changes.

regards, tom lane

On 2016-09-13 14:47:08 -0400, Tom Lane wrote:
> Also I notice that the WaitEventSet thread started with a simple
> pgbench test, so I don't really buy the claim that that's not a
> way that will reach the problem.

You can reach it, but not when using 1 core:one pgbench thread:one client connection, there need to be more connections than that. At least that was my observation on x86 / linux.

Andres

Andres Freund <andres@anarazel.de> writes:

> On 2016-09-13 14:47:08 -0400, Tom Lane wrote:

>> Also I notice that the WaitEventSet thread started with a simple

>> pgbench test, so I don't really buy the claim that that's not a

>> way that will reach the problem.

> You can reach it, but not when using 1 core:one pgbench thread:one

> client connection, there need to be more connections than that. At least

> that was my observation on x86 / linux.

Well, that original test was

>> I tried to run pgbench -s 1000 -j 48 -c 48 -S -M prepared on 70 CPU-core

>> machine:

so no, not 1 client ;-)

Anyway, I decided to put my money where my mouth was and run my own

benchmark. On my couple-year-old Macbook Pro running OS X 10.11.6,

using a straight build of today's HEAD, asserts disabled, fsync off

but no other parameters changed, I did "pgbench -i -s 100" and then

did this a few times:

pgbench -T 60 -j 4 -c 4 -M prepared -S bench

(It's a 4-core CPU so I saw little point in pressing harder than

that.) Median of 3 runs was 56028 TPS. Repeating the runs with

kqueue-v5.patch applied, I got a median of 58975 TPS, or 5% better.

Run-to-run variation was only around 1% in each case.

So that's not a huge improvement, but it's clearly above the noise

floor, and this laptop is not what anyone would use for production

work eh? Presumably you could show even better results on something

closer to server-grade hardware with more active clients.

So at this point I'm wondering why Thomas and Heikki could not measure

any win. Based on my results it should be easy. Is it possible that

OS X is better tuned for multi-CPU hardware than FreeBSD?

regards, tom lane
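As a rough cross-check of the figures in the message above, the relative change between two median TPS values can be computed as in the following sketch. The helper names `relative_gain` and `above_noise` are illustrative and not part of pgbench or the patch; the inputs are the medians and the roughly 1% run-to-run variation reported above.

```python
def relative_gain(before_tps, after_tps):
    """Percentage change going from before_tps to after_tps."""
    return (after_tps - before_tps) / before_tps * 100.0


def above_noise(gain_pct, noise_pct):
    """True when the measured gain exceeds the observed run-to-run variation."""
    return abs(gain_pct) > noise_pct


# Medians of 3 runs reported above: HEAD vs. kqueue-v5.patch on OS X.
head_tps, patched_tps = 56028, 58975
gain = relative_gain(head_tps, patched_tps)
print(f"{gain:.1f}% (above ~1% noise: {above_noise(gain, 1.0)})")
```

This only compares two summary numbers; it says nothing about statistical significance beyond the stated run-to-run variation.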

On 2016-09-13 15:37:22 -0400, Tom Lane wrote:
> Andres Freund <andres@anarazel.de> writes:
> > On 2016-09-13 14:47:08 -0400, Tom Lane wrote:
> >> Also I notice that the WaitEventSet thread started with a simple
> >> pgbench test, so I don't really buy the claim that that's not a
> >> way that will reach the problem.
>
> > You can reach it, but not when using 1 core:one pgbench thread:one
> > client connection, there need to be more connections than that. At least
> > that was my observation on x86 / linux.
>
> Well, that original test was
>
> >> I tried to run pgbench -s 1000 -j 48 -c 48 -S -M prepared on 70 CPU-core
> >> machine:
>
> so no, not 1 client ;-)

What I meant wasn't one client, but less than one client per cpu, and using a pgbench thread per backend. That way usually, at least on linux, there'll be a relatively small amount of poll/epoll/whatever, because the recvmsg()s will always have data available.

> Anyway, I decided to put my money where my mouth was and run my own
> benchmark.

Cool.

> (It's a 4-core CPU so I saw little point in pressing harder than
> that.)

I think in reality most busy machines, where performance and scalability matter, are overcommitted in the number of connections vs. cores. And if you look at throughput graphs that makes sense; they tend to increase considerably after reaching #hardware-threads, even if all connections are full throttle busy. It might not make sense if you just run large analytics queries, or if you want the lowest latency possible, but in everything else, the reality is that machines are often overcommitted for good reason.

> So at this point I'm wondering why Thomas and Heikki could not measure
> any win. Based on my results it should be easy. Is it possible that
> OS X is better tuned for multi-CPU hardware than FreeBSD?

Hah!

Greetings,

Andres Freund
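To probe the overcommitted case described above, one could sweep the client count past the number of cores. The sketch below only builds the command lines. The flags are the ones already used in this thread (-T, -j, -c, -M prepared, -S), while the multipliers, the helper names and the database name "bench" are assumptions chosen for illustration.

```python
import os


def pgbench_cmd(clients, seconds=60, db="bench"):
    """Select-only, prepared-mode pgbench run with one thread per client."""
    return ["pgbench", "-T", str(seconds), "-j", str(clients),
            "-c", str(clients), "-M", "prepared", "-S", db]


def sweep(cores, factors=(1, 2, 4)):
    """Command lines for client counts at 1x, 2x and 4x the core count."""
    return [pgbench_cmd(cores * factor) for factor in factors]


if __name__ == "__main__":
    # os.cpu_count() may return None on unusual platforms; fall back to 1.
    for cmd in sweep(os.cpu_count() or 1):
        print(" ".join(cmd))
```

Each printed line can be pasted into a shell, or passed to `subprocess.run` if the runs should be automated.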

Andres Freund <andres@anarazel.de> writes:

> On 2016-09-13 15:37:22 -0400, Tom Lane wrote:

>> (It's a 4-core CPU so I saw little point in pressing harder than

>> that.)

> I think in reality most busy machines, where performance and scalability

> matter, are overcommitted in the number of connections vs. cores. And

> if you look at throughput graphs that makes sense; they tend to increase

> considerably after reaching #hardware-threads, even if all connections

> are full throttle busy.

At -j 10 -c 10, all else the same, I get 84928 TPS on HEAD and 90357

with the patch, so about 6% better.

>> So at this point I'm wondering why Thomas and Heikki could not measure

>> any win. Based on my results it should be easy. Is it possible that

>> OS X is better tuned for multi-CPU hardware than FreeBSD?

> Hah!

Well, there must be some reason why this patch improves matters on OS X

and not FreeBSD ...

regards, tom lane

I wrote:
> At -j 10 -c 10, all else the same, I get 84928 TPS on HEAD and 90357
> with the patch, so about 6% better.

And at -j 1 -c 1, I get 22390 and 24040 TPS, or about 7% better with the patch. So what I am seeing on OS X isn't contention of any sort, but just a straight speedup that's independent of the number of clients (at least up to 10). Probably this represents less setup/teardown cost for kqueue() waits than poll() waits.

So you could spin this as "FreeBSD's poll() implementation is better than OS X's", or as "FreeBSD's kqueue() implementation is worse than OS X's", but either way I do not think we're seeing the same issue that was originally reported against Linux, where there was no visible problem at all till you got to a couple dozen clients, cf

https://www.postgresql.org/message-id/CAB-SwXbPmfpgL6N4Ro4BbGyqXEqqzx56intHHBCfvpbFUx1DNA%40mail.gmail.com

I'm inclined to think the kqueue patch is worth applying just on the grounds that it makes things better on OS X and doesn't seem to hurt on FreeBSD. Whether anyone would ever get to the point of seeing intra-kernel contention on these platforms is hard to predict, but we'd be ahead of the curve if so.

It would be good for someone else to reproduce my results though. For one thing, 5%-ish is not that far above the noise level; maybe what I'm measuring here is just good luck from relocation of critical loops into more cache-line-friendly locations.

regards, tom lane
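The setup/teardown point above can be pictured with a small register-once, wait-many sketch. This is not PostgreSQL's WaitEventSet code, only an illustration of the model: with kqueue (or epoll) the set of interesting descriptors is registered with the kernel once and then waited on repeatedly, whereas poll() is handed the whole descriptor array on every call. Python's standard `selectors.DefaultSelector` picks kqueue on BSD and OS X and epoll on Linux; the function name `wait_many` is made up for this example.

```python
import selectors
import socket


def wait_many(rounds=3):
    """Register one socket once, then wait on it several times."""
    sel = selectors.DefaultSelector()
    reader, writer = socket.socketpair()
    reader.setblocking(False)
    # One-time registration; later waits reuse it without re-describing the set.
    sel.register(reader, selectors.EVENT_READ)
    seen = []
    try:
        for i in range(rounds):
            writer.send(bytes([i]))
            for key, _events in sel.select(timeout=1.0):
                seen.append(key.fileobj.recv(1)[0])
    finally:
        sel.unregister(reader)
        sel.close()
        reader.close()
        writer.close()
    return type(sel).__name__, seen


if __name__ == "__main__":
    backend, values = wait_many()
    print(backend, values)
```

The backend name printed depends on the platform, so it is a quick way to confirm which kernel interface is actually in use.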

On Wed, Sep 14, 2016 at 12:06 AM, Tom Lane <tgl@sss.pgh.pa.us> wrote:
> I wrote:
>> At -j 10 -c 10, all else the same, I get 84928 TPS on HEAD and 90357
>> with the patch, so about 6% better.
>
> And at -j 1 -c 1, I get 22390 and 24040 TPS, or about 7% better with
> the patch. So what I am seeing on OS X isn't contention of any sort,
> but just a straight speedup that's independent of the number of clients
> (at least up to 10). Probably this represents less setup/teardown cost
> for kqueue() waits than poll() waits.

Thanks for running all these tests. I hadn't considered OS X performance.

> So you could spin this as "FreeBSD's poll() implementation is better than
> OS X's", or as "FreeBSD's kqueue() implementation is worse than OS X's",
> but either way I do not think we're seeing the same issue that was
> originally reported against Linux, where there was no visible problem at
> all till you got to a couple dozen clients, cf
>
> https://www.postgresql.org/message-id/CAB-SwXbPmfpgL6N4Ro4BbGyqXEqqzx56intHHBCfvpbFUx1DNA%40mail.gmail.com
>
> I'm inclined to think the kqueue patch is worth applying just on the
> grounds that it makes things better on OS X and doesn't seem to hurt
> on FreeBSD. Whether anyone would ever get to the point of seeing
> intra-kernel contention on these platforms is hard to predict, but
> we'd be ahead of the curve if so.

I was originally thinking of this as simply the obvious missing implementation of Andres's WaitEventSet API which would surely pay off later as we do more with that API (asynchronous execution with many remote nodes for sharding, built-in connection pooling/admission control for large numbers of sockets?, ...). I wasn't really expecting it to show performance increases in simple one or two pipe/socket cases on small core count machines, and it's interesting that it clearly does on OS X.

> It would be good for someone else to reproduce my results though.
> For one thing, 5%-ish is not that far above the noise level; maybe
> what I'm measuring here is just good luck from relocation of critical
> loops into more cache-line-friendly locations.

Similar results here on a 4 core 2.2GHz Core i7 MacBook Pro running OS X 10.11.5. With default settings except fsync = off, I ran pgbench -i -s 100, then took the median result of three runs of pgbench -T 60 -j 4 -c 4 -M prepared -S. I used two different compilers in case it helps to see results with different random instruction cache effects, and got the following numbers:

Apple clang 703.0.31: 51654 TPS -> 55739 TPS = 7.9% improvement
GCC 6.1.0 from MacPorts: 52552 TPS -> 55143 TPS = 4.9% improvement

I reran the tests under FreeBSD 10.3 on a 4 core laptop and again saw absolutely no measurable difference at 1, 4 or 24 clients. Maybe a big enough server could be made to contend on the postmaster pipe's selinfo->si_mtx, in selrecord(), in pipe_poll() -- maybe that'd be directly equivalent to what happened on multi-socket Linux with poll(), but I don't know.

--
Thomas Munro
http://www.enterprisedb.com

On Wed, Sep 14, 2016 at 7:06 AM, Tom Lane <tgl@sss.pgh.pa.us> wrote:
> It would be good for someone else to reproduce my results though.
> For one thing, 5%-ish is not that far above the noise level; maybe
> what I'm measuring here is just good luck from relocation of critical
> loops into more cache-line-friendly locations.

From an OSX laptop with -S, -c 1 and -M prepared (9 runs, removed the three best and three worst):
- HEAD: 9356/9343/9369
- HEAD + patch: 9433/9413/9461.071168

This laptop has a lot of I/O overhead... Still there is a slight improvement here as well. Looking at the progress report, per-second TPS reaches the 9500~9600 range more easily and more often with the patch. So at least I am seeing something.
--
Michael

Michael Paquier <michael.paquier@gmail.com> writes:

> From an OSX laptop with -S, -c 1 and -M prepared (9 runs, removed the

> three best and three worst):

> - HEAD: 9356/9343/9369

> - HEAD + patch: 9433/9413/9461.071168

> This laptop has a lot of I/O overhead... Still there is a slight

> improvement here as well. Looking at the progress report, per-second

> TPS gets easier more frequently into 9500~9600 TPS with the patch. So

> at least I am seeing something.

Which OSX version exactly?

regards, tom lane

On Wed, Sep 14, 2016 at 3:32 PM, Tom Lane <tgl@sss.pgh.pa.us> wrote:
> Michael Paquier <michael.paquier@gmail.com> writes:
>> From an OSX laptop with -S, -c 1 and -M prepared (9 runs, removed the
>> three best and three worst):
>> - HEAD: 9356/9343/9369
>> - HEAD + patch: 9433/9413/9461.071168
>> This laptop has a lot of I/O overhead... Still there is a slight
>> improvement here as well. Looking at the progress report, per-second
>> TPS gets easier more frequently into 9500~9600 TPS with the patch. So
>> at least I am seeing something.
>
> Which OSX version exactly?

El Capitan 10.11.6. With -s 20 (300MB) and 1GB of shared_buffers, so that everything fits in memory. Actually re-running the tests now with no VMs around and no apps, I am getting close to 9650~9700 TPS with the patch, and 9300~9400 TPS on HEAD, so that's unlikely to be only noise.
--
Michael
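The "9 runs, removed the three best and three worst" procedure quoted above can be sketched as follows. The helper name `middle_runs` is illustrative. The nine raw results were not posted, so the example applies the final averaging step only to the three surviving values per build that were reported.

```python
from statistics import mean


def middle_runs(runs, drop=3):
    """Sort the runs and discard the `drop` lowest and `drop` highest."""
    ordered = sorted(runs)
    if len(ordered) <= 2 * drop:
        raise ValueError("not enough runs left after trimming")
    return ordered[drop:len(ordered) - drop]


# Values that survived trimming, as reported in the thread.
head_kept = [9356, 9343, 9369]
patched_kept = [9433, 9413, 9461.071168]

gain_pct = (mean(patched_kept) - mean(head_kept)) / mean(head_kept) * 100.0
print(f"HEAD {mean(head_kept):.0f} TPS, patched {mean(patched_kept):.0f} TPS, "
      f"{gain_pct:.2f}% change")
```

Trimming the extremes makes the comparison less sensitive to one-off stalls, such as the I/O overhead mentioned for this laptop.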

Hi,

On 14/09/2016 00:06, Tom Lane wrote:
> I'm inclined to think the kqueue patch is worth applying just on the
> grounds that it makes things better on OS X and doesn't seem to hurt
> on FreeBSD. Whether anyone would ever get to the point of seeing
> intra-kernel contention on these platforms is hard to predict, but
> we'd be ahead of the curve if so.
>
> It would be good for someone else to reproduce my results though.
> For one thing, 5%-ish is not that far above the noise level; maybe
> what I'm measuring here is just good luck from relocation of critical
> loops into more cache-line-friendly locations.

FWIW, I've tested HEAD vs patch on a 2-cpu low end NetBSD 7.0 i386 machine.

HEAD: 1890/1935/1889 tps
kqueue: 1905/1957/1932 tps

no weird surprises, and basically no differences either.

Cheers
--
Matteo Beccati
Development & Consulting - http://www.beccati.com/

On Wed, Sep 14, 2016 at 9:09 AM, Matteo Beccati <php@beccati.com> wrote:
> Hi,
>
> On 14/09/2016 00:06, Tom Lane wrote:
>> I'm inclined to think the kqueue patch is worth applying just on the
>> grounds that it makes things better on OS X and doesn't seem to hurt
>> on FreeBSD. Whether anyone would ever get to the point of seeing
>> intra-kernel contention on these platforms is hard to predict, but
>> we'd be ahead of the curve if so.
>>
>> It would be good for someone else to reproduce my results though.
>> For one thing, 5%-ish is not that far above the noise level; maybe
>> what I'm measuring here is just good luck from relocation of critical
>> loops into more cache-line-friendly locations.
>
> FWIW, I've tested HEAD vs patch on a 2-cpu low end NetBSD 7.0 i386 machine.
>
> HEAD: 1890/1935/1889 tps
> kqueue: 1905/1957/1932 tps
>
> no weird surprises, and basically no differences either.
>
> Cheers
> --
> Matteo Beccati
> Development & Consulting - http://www.beccati.com/

Thomas Munro brought up in #postgresql on freenode needing someone to test a patch on a larger FreeBSD server. I've got a pretty decent machine (3.1GHz Quad Core Xeon E3-1220V3, 16GB ECC RAM, ZFS mirror on WD Red HDD) so I offered to give it a try.

Bench setup was:

pgbench -i -s 100 -d postgres

I ran this against 9.6rc1 instead of HEAD like most of the others in this thread seem to have done. Not sure if that makes a difference and can re-run if needed.

With higher concurrency, this seems to cause decreased performance. You can tell which of the runs is the kqueue patch by looking at the path to pgbench.

SINGLE PROCESS

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1547387

latency average: 0.039 ms

tps = 25789.750236 (including connections establishing)

tps = 25791.018293 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1549442

latency average: 0.039 ms

tps = 25823.981255 (including connections establishing)

tps = 25825.189871 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1547936

latency average: 0.039 ms

tps = 25798.572583 (including connections establishing)

tps = 25799.917170 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1520722

latency average: 0.039 ms

tps = 25343.122533 (including connections establishing)

tps = 25344.357116 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S postgres -p 5496~

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1549282

latency average: 0.039 ms

tps = 25821.107595 (including connections establishing)

tps = 25822.407310 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S postgres -p 5496~

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1541907

latency average: 0.039 ms

tps = 25698.025983 (including connections establishing)

tps = 25699.270663 (excluding connections establishing)

FOUR

/home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 4282185

latency average: 0.056 ms

tps = 71369.146931 (including connections establishing)

tps = 71372.646243 (excluding connections establishing)

[keith@corpus ~/postgresql-9.6rc1_kqueue]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 4777596

latency average: 0.050 ms

tps = 79625.214521 (including connections establishing)

tps = 79629.800123 (excluding connections establishing)

[keith@corpus ~/postgresql-9.6rc1_kqueue]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 4809132

latency average: 0.050 ms

tps = 80151.803249 (including connections establishing)

tps = 80155.903203 (excluding connections establishing)

/home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 5114286

latency average: 0.047 ms

tps = 85236.858383 (including connections establishing)

tps = 85241.847800 (excluding connections establishing)

/home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 5600194

latency average: 0.043 ms

tps = 93335.508864 (including connections establishing)

tps = 93340.970416 (excluding connections establishing)

/home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 5606962

latency average: 0.043 ms

tps = 93447.905764 (including connections establishing)

tps = 93454.077142 (excluding connections establishing)

SIXTY-FOUR

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4084213

latency average: 0.940 ms

tps = 67633.476871 (including connections establishing)

tps = 67751.865998 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4119994

latency average: 0.932 ms

tps = 68474.847365 (including connections establishing)

tps = 68540.221835 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4068071

latency average: 0.944 ms

tps = 67192.603129 (including connections establishing)

tps = 67254.760177 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4281302

latency average: 0.897 ms

tps = 70147.847337 (including connections establishing)

tps = 70389.283564 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4573114

latency average: 0.840 ms

tps = 74848.884475 (including connections establishing)

tps = 75102.862539 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4341447

latency average: 0.884 ms

tps = 72350.152281 (including connections establishing)

tps = 72421.831179 (excluding connections establishing)
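Since the runs above are distinguished only by the path to pgbench, a small script can group them by build and client count and take the median of the "excluding connections establishing" figures. The sketch below assumes the output format shown in this message; the function name `summarize` and the abridged `SAMPLE` log (the six -c 4 runs, commands and final tps lines only) are for illustration.

```python
import re
from collections import defaultdict
from statistics import median

# Build directory (e.g. .../pgsql96rc1_kqueue) and the -c client count.
CMD = re.compile(r"(\S+)/bin/pgbench\b.*?-c (\d+)")
TPS = re.compile(r"tps = ([\d.]+) \(excluding connections establishing\)")


def summarize(log):
    """Median TPS per (build directory name, client count)."""
    runs = defaultdict(list)
    key = None
    for line in log.splitlines():
        cmd = CMD.search(line)
        if cmd:
            key = (cmd.group(1).rsplit("/", 1)[-1], int(cmd.group(2)))
            continue
        tps = TPS.search(line)
        if tps and key is not None:
            runs[key].append(float(tps.group(1)))
    return {k: median(v) for k, v in runs.items()}


SAMPLE = """\
/home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496
tps = 71372.646243 (excluding connections establishing)
/home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496
tps = 79629.800123 (excluding connections establishing)
/home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496
tps = 80155.903203 (excluding connections establishing)
/home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496
tps = 85241.847800 (excluding connections establishing)
/home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496
tps = 93340.970416 (excluding connections establishing)
/home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S postgres -p 5496
tps = 93454.077142 (excluding connections establishing)
"""

if __name__ == "__main__":
    for (build, clients), tps in sorted(summarize(SAMPLE).items()):
        print(f"{build:20s} -c {clients:<3d} median {tps:.2f} TPS")
```

For the -c 4 runs this gives a median of 79629.80 TPS for the kqueue build against 93340.97 TPS for the unpatched build, which matches the decreased performance described at the top of this message.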

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4084213

latency average: 0.940 ms

tps = 67633.476871 (including connections establishing)

tps = 67751.865998 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4119994

latency average: 0.932 ms

tps = 68474.847365 (including connections establishing)

tps = 68540.221835 (excluding connections establishing)

[keith@corpus /tank/pgdata]$ /home/keith/pgsql96rc1_kqueue/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S postgres -p 5496

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4068071

latency average: 0.944 ms

tps = 67192.603129 (including connections establishing)

tps = 67254.760177 (excluding connections establishing)

On Thu, Sep 15, 2016 at 10:48 AM, Keith Fiske <keith@omniti.com> wrote:
> Thomas Munro brought up in #postgresql on freenode needing someone to test a
> patch on a larger FreeBSD server. I've got a pretty decent machine (3.1Ghz
> Quad Core Xeon E3-1220V3, 16GB ECC RAM, ZFS mirror on WD Red HDD) so offered
> to give it a try.
>
> Bench setup was:
> pgbench -i -s 100 -d postgres
>
> I ran this against 96rc1 instead of HEAD like most of the others in this
> thread seem to have done. Not sure if that makes a difference and can re-run
> if needed.
> With higher concurrency, this seems to cause decreased performance. You can
> tell which of the runs is the kqueue patch by looking at the path to
> pgbench.

Thanks Keith. So to summarise, you saw no change with 1 client, but with 4 clients you saw a significant drop in performance (~93K TPS -> ~80K TPS), and a smaller drop for 64 clients (~72K TPS -> ~68K TPS). These results seem to be a nail in the coffin for this patch for now.

Thanks to everyone who tested. I might be back in a later commitfest if I can figure out why and how to fix it.

--
Thomas Munro
http://www.enterprisedb.com
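For readers who want to check the 64-client figures in the summary, here is a minimal C sketch that averages the three "excluding connections establishing" results reported for each build and computes the relative change. The function and array names are illustrative only and are not part of pgbench or of the patch. With these inputs the means come out at roughly 72.6K TPS for unpatched 96rc1 and 67.8K TPS for the kqueue build, a drop of about 6.6%.

```c
#include <assert.h>
#include <stddef.h>

/* Arithmetic mean of n throughput samples (transactions per second). */
double
mean_tps(const double *samples, size_t n)
{
    double sum = 0.0;

    assert(n > 0);
    for (size_t i = 0; i < n; i++)
        sum += samples[i];
    return sum / (double) n;
}

/*
 * Relative change from baseline to patched, in percent.
 * A negative result means the patched build was slower.
 */
double
percent_change(double baseline, double patched)
{
    assert(baseline > 0.0);
    return (patched - baseline) / baseline * 100.0;
}

/* 64-client runs from this thread, "excluding connections establishing". */
const double tps_96rc1[] = {70389.283564, 75102.862539, 72421.831179};
const double tps_kqueue[] = {67751.865998, 68540.221835, 67254.760177};
```

Calling `percent_change(mean_tps(tps_96rc1, 3), mean_tps(tps_kqueue, 3))` gives the relative slowdown. Three runs per configuration is a small sample, so the result is only a rough indication.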

On Thu, Sep 15, 2016 at 11:04 AM, Thomas Munro <thomas.munro@enterprisedb.com> wrote:
> On Thu, Sep 15, 2016 at 10:48 AM, Keith Fiske <keith@omniti.com> wrote:
>> Thomas Munro brought up in #postgresql on freenode needing someone to test a
>> patch on a larger FreeBSD server. I've got a pretty decent machine (3.1Ghz
>> Quad Core Xeon E3-1220V3, 16GB ECC RAM, ZFS mirror on WD Red HDD) so offered
>> to give it a try.
>>
>> Bench setup was:
>> pgbench -i -s 100 -d postgres
>>
>> I ran this against 96rc1 instead of HEAD like most of the others in this
>> thread seem to have done. Not sure if that makes a difference and can re-run
>> if needed.
>> With higher concurrency, this seems to cause decreased performance. You can
>> tell which of the runs is the kqueue patch by looking at the path to
>> pgbench.
>
> Thanks Keith. So to summarise, you saw no change with 1 client, but
> with 4 clients you saw a significant drop in performance (~93K TPS ->
> ~80K TPS), and a smaller drop for 64 clients (~72K TPS -> ~68K TPS).
> These results seem to be a nail in the coffin for this patch for now.
>
> Thanks to everyone who tested. I might be back in a later commitfest
> if I can figure out why and how to fix it.

Ok, here's a version tweaked to use EVFILT_PROC for postmaster death detection instead of the pipe, as Tom Lane suggested in another thread[1].

The pipe still exists and is used for PostmasterIsAlive(), and also for the race case where kevent discovers that the PID doesn't exist when you try to add it. Presumably the postmaster died already, but we want to defer the report of that until you call WaitEventSetWait, so in that case we stick the traditional pipe into the kqueue set as before so that it'll fire a readable-because-EOF event then.

Still no change measurable on my laptop. Keith, would you be able to test this on your rig and see if it sucks any less than the last one?

[1] https://www.postgresql.org/message-id/13774.1473972000%40sss.pgh.pa.us

--
Thomas Munro
http://www.enterprisedb.com
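The registration logic described in this message can be sketched roughly as follows. This is not the code from the attached patch: `watch_parent_death` is a hypothetical helper written only for illustration, and it assumes the caller already owns a kqueue descriptor and the read end of a "death pipe" whose write end is held by the watched process. It registers EVFILT_PROC with NOTE_EXIT first and, if kevent() reports ESRCH because the process is already gone, registers the pipe for EVFILT_READ so that the EOF is reported on the next wait. On platforms without kqueue the sketch compiles to a stub that fails with ENOSYS.

```c
#include <sys/types.h>
#include <errno.h>
#include <unistd.h>

#if defined(__FreeBSD__) || defined(__NetBSD__) || defined(__OpenBSD__) || \
    defined(__DragonFly__) || defined(__APPLE__)
#include <sys/event.h>
#include <sys/time.h>
#define HAVE_KQUEUE_SKETCH 1
#endif

/*
 * Hypothetical helper, not taken from the patch.
 *
 * Ask kqueue "kq" to report the exit of "parent_pid".  If that process no
 * longer exists (ESRCH), watch "death_pipe_fd" for readability instead; the
 * pipe becomes readable at EOF once its write end is closed, so the death
 * is reported by the next kevent() wait rather than at registration time.
 *
 * Returns 0 on success, -1 on failure with errno set.
 */
int
watch_parent_death(int kq, pid_t parent_pid, int death_pipe_fd)
{
#ifdef HAVE_KQUEUE_SKETCH
    struct kevent ev;

    /* udata is passed as 0, which is valid whether it is void * or intptr_t */
    EV_SET(&ev, parent_pid, EVFILT_PROC, EV_ADD, NOTE_EXIT, 0, 0);
    if (kevent(kq, &ev, 1, NULL, 0, NULL) == 0)
        return 0;
    if (errno != ESRCH)
        return -1;              /* unexpected failure, report it */

    /* Race case: the process already exited, so fall back to the pipe. */
    EV_SET(&ev, death_pipe_fd, EVFILT_READ, EV_ADD, 0, 0, 0);
    return kevent(kq, &ev, 1, NULL, 0, NULL);
#else
    (void) kq;
    (void) parent_pid;
    (void) death_pipe_fd;
    errno = ENOSYS;             /* no kqueue on this platform */
    return -1;
#endif
}
```

A caller would create the queue with `kqueue()`, call the helper once at setup, and then treat either an EVFILT_PROC or an EVFILT_READ event on the pipe as "parent died". Keeping the pipe around also leaves a cheap way to poll for liveness outside the event loop, which matches the role described for PostmasterIsAlive().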

Attachments

Hi,

On 16/09/2016 05:11, Thomas Munro wrote:
> Still no change measurable on my laptop. Keith, would you be able to
> test this on your rig and see if it sucks any less than the last one?

I've tested kqueue-v6.patch on the Celeron NetBSD machine and numbers were consistently lower by about 5-10% vs fairly recent HEAD (same as my last pgbench runs).

Cheers
--
Matteo Beccati
Development & Consulting - http://www.beccati.com/

On Thu, Sep 15, 2016 at 11:11 PM, Thomas Munro <thomas.munro@enterprisedb.com

Ran benchmarks on unaltered 96rc1 again just to be safe. Those are first. Decided to throw a 32-client test in there as well to see if there's anything going on between 4 and 64.

~/pgsql96rc1/bin/pgbench -i -s 100 -d pgbench -p 5496

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1543809

latency average: 0.039 ms

tps = 25729.749474 (including connections establishing)

tps = 25731.006414 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1548340

latency average: 0.039 ms

tps = 25796.928387 (including connections establishing)

tps = 25798.275891 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 1 -c 1 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 1

number of threads: 1

duration: 60 s

number of transactions actually processed: 1535072

latency average: 0.039 ms

tps = 25584.182830 (including connections establishing)

tps = 25585.487246 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 5621013

latency average: 0.043 ms

tps = 93668.594248 (including connections establishing)

tps = 93674.730914 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 5659929

latency average: 0.042 ms

tps = 94293.572928 (including connections establishing)

tps = 94300.500395 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 4 -c 4 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 4

number of threads: 4

duration: 60 s

number of transactions actually processed: 5649572

latency average: 0.042 ms

tps = 94115.854165 (including connections establishing)

tps = 94123.436211 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 32 -c 32 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 32

number of threads: 32

duration: 60 s

number of transactions actually processed: 5196336

latency average: 0.369 ms

tps = 86570.696138 (including connections establishing)

tps = 86608.648579 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 32 -c 32 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 32

number of threads: 32

duration: 60 s

number of transactions actually processed: 5202443

latency average: 0.369 ms

tps = 86624.724577 (including connections establishing)

tps = 86664.848857 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 32 -c 32 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 32

number of threads: 32

duration: 60 s

number of transactions actually processed: 5198412

latency average: 0.369 ms

tps = 86637.730825 (including connections establishing)

tps = 86668.706105 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4790285

latency average: 0.802 ms

tps = 79800.369679 (including connections establishing)

tps = 79941.243428 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4852921

latency average: 0.791 ms

tps = 79924.873678 (including connections establishing)

tps = 80179.182200 (excluding connections establishing)

[keith@corpus ~]$ /home/keith/pgsql96rc1/bin/pgbench -T 60 -j 64 -c 64 -M prepared -S -p 5496 pgbench

starting vacuum...end.

transaction type: <builtin: select only>

scaling factor: 100

query mode: prepared

number of clients: 64

number of threads: 64

duration: 60 s

number of transactions actually processed: 4672965

latency average: 0.822 ms