Обсуждение: Using quicksort for every external sort run

I'll start a new thread for this, since my external sorting patch has

now evolved well past the original "quicksort with spillover"

idea...although not quite how I anticipated it would. It seems like

I've reached a good point to get some feedback.

I attach a patch series featuring a new, more comprehensive approach

to quicksorting runs during external sorts. What I have now still

includes "quicksort with spillover", but it's just a part of a larger

project. I am quite happy with the improvements in performance shown

by my testing, which I go into below.

Controversy

=========

A few weeks ago, I did not anticipate that I'd propose that

replacement selection sort be used far less (only somewhat less, since

I was only somewhat doubtful about the algorithm at the time). I had

originally planned on continuing to *always* use it for the first run,

to make "quicksort with spillover" possible (thereby sometimes

avoiding significant I/O by not spilling most tuples), but also to

make cases always considered sympathetic to replacement selection

continue to happen. I thought that second or subsequent runs could

still be quicksorted, but that I must still care about this latter

category, the traditional sympathetic cases. This latter category is

mostly just one important property of replacement selection: even

without a strong logical/physical correlation, the algorithm tends to

produce runs that are about twice the size of work_mem. (It's also

notable that replacement selection only produces one run with mostly

presorted input, even where input far exceeds work_mem, which is a

neat trick.)

I wanted to avoid controversy, but the case for the controversy is too

strong for me to ignore: despite these upsides, replacement selection

is obsolete, and should usually be avoided.

Replacement selection sort still has a role to play in making

"quicksort with spillover" possible (when a sympathetic case is

*anticipated*), but other than that it seems generally inferior to a

simple hybrid sort-merge strategy on modern hardware. By modern

hardware, I mean anything manufactured in at least the last 20 years.

We've already seen that the algorithm's use of a heap works badly with

modern CPU caches, but that is just one factor contributing to its

obsolescence.

The big selling point of replacement selection sort in the 20th

century was that it sometimes avoided multi-pass sorts as compared to

a simple sort-merge strategy (remember when tuplesort.c always used 7

tapes? When you need to use 7 actual magnetic tapes, rewinding is

expensive and in general this matters a lot!). We all know that memory

capacity has grown enormously since then, but we must also consider

another factor: At the same time, a simple hybrid sort-merge

strategy's capacity to more or less get the important detail here

right -- to avoid a multi-pass sort -- has increased quadratically

(relative to work_mem/memory capacity). As an example, testing shows

that for a datum tuplesort that requires about 2300MB of work_mem to

be completed as a simple internal sort this patch only needs 30MB to

just do one pass (see benchmark query below). I've mostly regressed

that particular property of tuplesort (it used to be less than 30MB),

but that's clearly the wrong thing to worry about for all kinds of

reasons, probably even in the unimportant cases now forced to do

multiple passes.

Multi-pass sorts

---------------------

I believe, in general, that we should consider a multi-pass sort to be

a kind of inherently suspect thing these days, in the same way that

checkpoints occurring 5 seconds apart are: not actually abnormal, but

something that we should regard suspiciously. Can you really not

afford enough work_mem to only do one pass? Does it really make sense

to add far more I/O and CPU costs to avoid that other tiny memory

capacity cost?

In theory, the answer could be "yes", but it seems highly unlikely.

Not only is very little memory required to avoid a multi-pass merge

step, but as described above the amount required grows very slowly

relative to linear growth in input. I propose to add a

checkpoint_warning style warning (with a checkpoint_warning style GUC

to control it). ISTM that these days, multi-pass merges are like

saving $2 on replacing a stairwell light bulb, at the expense of

regularly stumbling down the stairs in the dark. It shouldn't matter

if you have a 50 terabyte decision support database or if you're

paying Heroku a small monthly fee to run a database backing your web

app: simply avoiding multi-pass merges is probably always the most

economical solution, and by a wide margin.

Note that I am not skeptical of polyphase merging itself, even though

it is generally considered to be a complimentary technique to

replacement selection (some less formal writing on external sorting

seemingly fails to draw a sharp distinction). Nothing has changed

there.

Patch, performance

===============

Let's focus on a multi-run sort, that does not use "quicksort with

spillover", since that is all new, and is probably the most compelling

case for very large databases with hundreds of gigabytes of data to

sort.

I think that this patch requires a machine with more I/O bandwidth

than my laptop to get a proper sense of the improvement made. I've

been using a tmpfs temp_tablespace for testing, to simulate this. That

may leave me slightly optimistic about I/O costs, but you can usually

get significantly more sequential I/O bandwidth by adding additional

disks, whereas you cannot really buy new hardware to improve the

situation with excessive CPU cache misses.

Benchmark

---------------

-- Setup, 100 million tuple table with high cardinality int4 column (2

billion possible int4 values)

create table big_high_cardinality_int4 as

select (random() * 2000000000)::int4 s,

'abcdefghijlmn'::text junk

from generate_series(1, 100000000);

-- Make cost model hinting accurate:

analyze big_high_cardinality_int4;

checkpoint;

Let's start by comparing an external sort that uses 1/3 the memory of

an internal sort against the master branch. That's completely unfair

on the patch, of course, but it is a useful indicator of how well

external sorts do overall. Although an external sort surely cannot be

as fast as an internal sort, it might be able to approach an internal

sort's speed when there is plenty of I/O bandwidth. That's a good

thing to aim for, I think.

-- Master (just enough memory for internal sort):

set work_mem = '2300MB';

select count(distinct(s)) from big_high_cardinality;

***** Runtime after stabilization: ~33.6 seconds *****

-- Patch series, but with just over 1/3 the memory:

set work_mem = '800MB';

select count(distinct(s)) from big_high_cardinality;

***** Runtime after stabilization: ~37.1 seconds *****

The patch only takes ~10% more time to execute this query, which seems

very good considering that ~1/3 the work_mem has been put to use.

trace_sort output for patch during execution of this case:

LOG: begin datum sort: workMem = 819200, randomAccess = f

LOG: switching to external sort with 2926 tapes: CPU 0.39s/2.66u sec

elapsed 3.06 sec

LOG: replacement selection avg tuple size 24.00 crossover: 0.85

LOG: hybrid sort-merge in use from row 34952532 with 100000000.00 total rows

LOG: finished quicksorting run 1: CPU 0.39s/8.84u sec elapsed 9.24 sec

LOG: finished writing quicksorted run 1 to tape 0: CPU 0.60s/9.61u

sec elapsed 10.22 sec

LOG: finished quicksorting run 2: CPU 0.87s/18.61u sec elapsed 19.50 sec

LOG: finished writing quicksorted run 2 to tape 1: CPU 1.07s/19.38u

sec elapsed 20.46 sec

LOG: performsort starting: CPU 1.27s/21.79u sec elapsed 23.07 sec

LOG: finished quicksorting run 3: CPU 1.27s/27.07u sec elapsed 28.35 sec

LOG: finished writing quicksorted run 3 to tape 2: CPU 1.47s/27.69u

sec elapsed 29.18 sec

LOG: performsort done (except 3-way final merge): CPU 1.51s/28.54u

sec elapsed 30.07 sec

LOG: external sort ended, 146625 disk blocks used: CPU 1.76s/35.32u

sec elapsed 37.10 sec

Note that the on-tape runs are small relative to CPU costs, so this

query is a bit sympathetic (consider the time spent writing batches

that trace_sort indicates here). CREATE INDEX would not compare so

well with an internal sort, for example, especially if it was a

composite index or something. I've sized work_mem here in a deliberate

way, to make sure there are 3 runs of similar size by the time the

merge step is reached, which makes a small difference in the patch's

favor. All told, this seems like a very significant overall

improvement.

Now, consider master's performance with the same work_mem setting (a

fair test with comparable resource usage for master and patch):

-- Master

set work_mem = '800MB';

select count(distinct(s)) from big_high_cardinality;

***** Runtime after stabilization: ~120.9 seconds *****

The patch is ~3.25x faster than master here, which also seems like a

significant improvement. That's pretty close to the improvement

previously seen for good "quicksort with spillover" cases, but

suitable for every external sort case that doesn't use "quicksort with

spillover". In other words, no variety of external sort is not

significantly improved by the patch.

I think it's safe to suppose that there are also big benefits when

multiple concurrent sort operations run on the same system. For

example, when pg_restore has multiple jobs.

Worst case

---------------

Even with a traditionally sympathetic case for replacement selection

sort, the patch beats replacement selection with multiple on-tape

runs. When experimenting here, I did not forget to account for our

qsort()'s behavior in the event of *perfectly* presorted input

("Bubble sort best case" behavior [1]). Other than that, I have a hard

time thinking of an unsympathetic case for the patch, and could not

find any actual regressions with a fair amount of effort.

Abbreviated keys are not used when merging, but that doesn't seem to

be something that notably counts against the new approach (which will

have shorter runs on average). After all, the reason why abbreviated

keys aren't saved on disk for merging is that they're probably not

very useful when merging. They would resolve far fewer comparisons if

they were used during merging, and having somewhat smaller runs does

not result in significantly more non-abbreviated comparisons, even

when sorting random noise strings.

Avoiding replacement selection *altogether*

=================================

Assuming you agree with my conclusions on replacement selection sort

mostly not being worth it, we need to avoid replacement selection

except when it'll probably allow a "quicksort with spillover". In my

mind, that's now the *only* reason to use replacement selection.

Callers pass a hint to tuplesort indicating how many tuples it is

estimated will ultimately be passed before a sort is performed.

(Typically, this comes from a scan plan node's row estimate, or more

directly from the relcache for things like CREATE INDEX.)

Cost model -- details

----------------------------

Second or subsequent runs *never* use replacement selection -- it is

only *considered* for the first run, right before the possible point

of initial heapification within inittapes(). The cost model is

contained within the new function useselection(). See the second patch

in the series for full details. That's where this is added.

I have a fairly high bar for even using replacement selection for the

first run -- several factors can result in a simple hybrid sort-merge

strategy being used instead of a "quicksort with spillover", because

in general most of the benefit seems to be around CPU cache misses

rather than savings in I/O. Consider my benchmark query above once

more -- with replacement selection used for the first run in the

benchmark case above (e.g., with just the first patch in the series

applied, or setting the "optimize_avoid_selection" debug GUC to

"off"), I found that it took over twice as long to execute, even

though the second-or-subsequent (now smaller) runs were quicksorted

just the same, and were all merged just the same.

The numbers should make it obvious why I gave in to the temptation of

adding an ad-hoc, tuplesort-private cost model. At this point, I'd

rather scrap "quicksort with spillover" (and the use of replacement

selection under all possible circumstances) than scrap the idea of a

cost model. That would make more sense, even though it would give up

on the idea of saving most I/O where the work_mem threshold is only

crossed by a small amount.

Future work

=========

I anticipate a number of other things within the first patch in the

series, some of which are already worked out to some degree.

Asynchronous I/O

-------------------------

This patch leaves open the possibility of using something like

libaio/librt for sorting. That would probably use half of memtuples as

scratch space, while the other half is quicksorted.

Memory prefetching

---------------------------

To test what role memory prefetching is likely to have here, I attach

a custom version of my tuplesort/tuplestore prefetch patch, with

prefetching added to the "quicksort with spillover" and batch dumping

runs WRITETUP()-calling code. This seems to help performance

measurably. However, I guess it shouldn't really be considered as part

of this patch. It can follow the initial commit of the big, base patch

(or will becomes part of the base patch if and when prefetching is

committed first).

cost_sort() changes

--------------------------

I had every intention of making cost_sort() a continuous cost function

as part of this work. This could be justified by "quicksort with

spillover" allowing tuplesort to "blend" from internal to external

sorting as input size is gradually increased. This seemed like

something that would have significant non-obvious benefits in several

other areas. However, I've put off dealing with making any change to

cost_sort() because of concerns about the complexity of overlaying

such changes on top of the tuplesort-private cost model.

I think that this will need to be discussed in a lot more detail. As a

further matter, materialization of sort nodes will probably also

require tweaks to the costing for "quicksort with spillover". Recall

that "quicksort with spillover" can only work for !randomAccess

tuplesort callers.

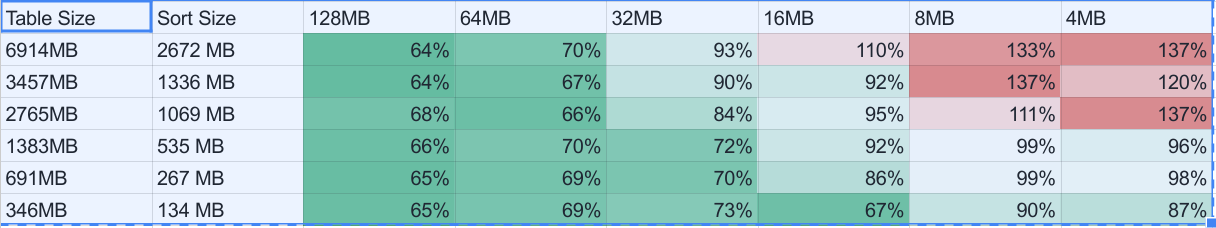

Run size

------------

This patch continues to have tuplesort determine run size based on the

availability of work_mem only. It does not entirely fix the problem of

having work_mem sizing impact performance in counter-intuitive ways.

In other words, smaller work_mem sizes can still be faster. It does

make that general situation much better, though, because quicksort is

a cache oblivious algorithm. Smaller work_mem sizes are sometimes a

bit faster, but never dramatically faster.

In general, the whole idea of making run size as big as possible is

bogus, unless that enables or is likely to enable a "quicksort with

spillover". The caller-supplied row count hint I've added may in the

future be extended to determine optimal run size ahead of time, when

it's perfectly clear (leaving aside misestimation) that a fully

internal sort (or "quicksort with spillover") will not occur. This

will result in faster external sorts where additional work_mem cannot

be put to good use. As a side benefit, external sorts will not be

effectively wasting a large amount of memory.

The cost model we eventually come up with to determine optimal run

size ought to balance certain things. Assuming a one-pass merge step,

then we should balance the time lost waiting on the first run and time

quicksorting the last run with the gradual increase in the cost during

the merge step. Maybe the non-use of abbreviated keys during the merge

step should also be considered. Alternatively, the run size may be

determined by a GUC that is typically sized at drive controller cache

size (e.g. 1GB) when any kind of I/O avoidance for the sort appears

impossible.

[1] Commit a3f0b3d6

--

Peter Geoghegan

Вложения

On Thu, Aug 20, 2015 at 3:24 AM, Peter Geoghegan <pg@heroku.com> wrote: > I believe, in general, that we should consider a multi-pass sort to be > a kind of inherently suspect thing these days, in the same way that > checkpoints occurring 5 seconds apart are: not actually abnormal, but > something that we should regard suspiciously. Can you really not > afford enough work_mem to only do one pass? Does it really make sense > to add far more I/O and CPU costs to avoid that other tiny memory > capacity cost? I think this is the crux of the argument. And I think you're basically, but not entirely, right. The key metric there is not how cheap memory has gotten but rather what the ratio is between the system's memory and disk storage. The use case I think you're leaving out is the classic "data warehouse" with huge disk arrays attached to a single host running massive queries for hours. In that case reducing run size will reduce I/O requirements directly and halving the amount of I/O sort takes will halve the time it takes regardless of cpu efficiency. And I have a suspicion typical data distributions get much better than a 2x speedup. But I think you're basically right that this is the wrong use case to worry about for most users. Even those users that do have large batch queries are probably not processing so much that they should be doing multiple passes. The ones that do are are probably more interested in parallel query, federated databases, column stores, and so on rather than worrying about just how many hours it takes to sort their multiple terabytes on a single processor. I am quite suspicious of quicksort though. It has O(n^2) worst case and I think it's only a matter of time before people start worrying about DOS attacks from users able to influence the data ordering. It's also not very suitable for GPU processing. Quicksort gets most of its advantage from cache efficiency, it isn't a super efficient algorithm otherwise, are there not other cache efficient algorithms to consider? Alternately, has anyone tested whether Timsort would work well? -- greg

Greg Stark <stark@mit.edu> writes:

> Alternately, has anyone tested whether Timsort would work well?

I think that was proposed a few years ago and did not look so good

in simple testing.

regards, tom lane

On 20 August 2015 at 03:24, Peter Geoghegan <pg@heroku.com> wrote:

Can you summarize what this patch does? I understand clearly what it doesn't do...

--

The patch is ~3.25x faster than master

I've tried to read this post twice and both times my work_mem overflowed. ;-)

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

On Thu, Aug 20, 2015 at 6:54 AM, Tom Lane <tgl@sss.pgh.pa.us> wrote: > Greg Stark <stark@mit.edu> writes: >> Alternately, has anyone tested whether Timsort would work well? > > I think that was proposed a few years ago and did not look so good > in simple testing. I tested it in 2012. I got as far as writing a patch. Timsort is very good where comparisons are expensive -- that's why it's especially compelling when your comparator is written in Python. However, when testing it with text, even though there were significantly fewer comparisons, it was still slower than quicksort. Quicksort is cache oblivious, and that's an enormous advantage. This was before abbreviated keys; these days, the difference must be larger. -- Peter Geoghegan

On Thu, Aug 20, 2015 at 8:15 AM, Simon Riggs <simon@2ndquadrant.com> wrote: > On 20 August 2015 at 03:24, Peter Geoghegan <pg@heroku.com> wrote: >> >> >> The patch is ~3.25x faster than master > > > I've tried to read this post twice and both times my work_mem overflowed. > ;-) > > Can you summarize what this patch does? I understand clearly what it doesn't > do... The most important thing that it does is always quicksort runs, that are formed by simply filling work_mem with tuples in no particular order, rather than trying to make runs that are twice as large as work_mem on average. That's what the ~3.25x improvement concerned. That's actually a significantly simpler algorithm than replacement selection, and appears to be much faster. You might even say that it's a dumb algorithm, because it is less sophisticated than replacement selection. However, replacement selection tends to use CPU caches very poorly, while its traditional advantages have become dramatically less important due to large main memory sizes in particular. Also, it hurts that we don't currently dump tuples in batches, for several reasons. Better to do memory intense operations in batch, rather than having a huge inner loop, in order to minimize or prevent instruction cache misses. And we can better take advantage of asynchronous I/O. The complicated aspect of considering the patch is whether or not it's okay to not use replacement selection anymore -- is that an appropriate trade-off? The reason that the code has not actually been simplified by this patch is that I still want to use replacement selection for one specific case: when it is anticipated that a "quicksort with spillover" can occur, which is only possible with incremental spilling. That may avoid most I/O, by spilling just a few tuples using a heap/priority queue, and quicksorting everything else. That's compelling when you can manage it, but no reason to always use replacement selection for the first run in the common case where there well be several runs in total. Is that any clearer? To borrow a phrase from the processor architecture community, from a high level this is a "Brainiac versus Speed Demon" [1] trade-off. (I wish that there was a widely accepted name for this trade-off.) [1] http://www.lighterra.com/papers/modernmicroprocessors/#thebrainiacdebate -- Peter Geoghegan

On Thu, Aug 20, 2015 at 10:41 AM, Peter Geoghegan <pg@heroku.com> wrote:

On Thu, Aug 20, 2015 at 8:15 AM, Simon Riggs <simon@2ndquadrant.com> wrote:

> On 20 August 2015 at 03:24, Peter Geoghegan <pg@heroku.com> wrote:

>>

>>

>> The patch is ~3.25x faster than master

>

>

> I've tried to read this post twice and both times my work_mem overflowed.

> ;-)

>

> Can you summarize what this patch does? I understand clearly what it doesn't

> do...

The most important thing that it does is always quicksort runs, that

are formed by simply filling work_mem with tuples in no particular

order, rather than trying to make runs that are twice as large as

work_mem on average. That's what the ~3.25x improvement concerned.

That's actually a significantly simpler algorithm than replacement

selection, and appears to be much faster. You might even say that it's

a dumb algorithm, because it is less sophisticated than replacement

selection. However, replacement selection tends to use CPU caches very

poorly, while its traditional advantages have become dramatically less

important due to large main memory sizes in particular. Also, it hurts

that we don't currently dump tuples in batches, for several reasons.

Better to do memory intense operations in batch, rather than having a

huge inner loop, in order to minimize or prevent instruction cache

misses. And we can better take advantage of asynchronous I/O.

The complicated aspect of considering the patch is whether or not it's

okay to not use replacement selection anymore -- is that an

appropriate trade-off?

The reason that the code has not actually been simplified by this

patch is that I still want to use replacement selection for one

specific case: when it is anticipated that a "quicksort with

spillover" can occur, which is only possible with incremental

spilling. That may avoid most I/O, by spilling just a few tuples using

a heap/priority queue, and quicksorting everything else. That's

compelling when you can manage it, but no reason to always use

replacement selection for the first run in the common case where there

well be several runs in total.

Is that any clearer? To borrow a phrase from the processor

architecture community, from a high level this is a "Brainiac versus

Speed Demon" [1] trade-off. (I wish that there was a widely accepted

name for this trade-off.)

[1] http://www.lighterra.com/papers/modernmicroprocessors/#thebrainiacdebate--

Peter Geoghegan

--

Sent via pgsql-hackers mailing list (pgsql-hackers@postgresql.org)

To make changes to your subscription:

http://www.postgresql.org/mailpref/pgsql-hackers

Hi, Peter,

Just a quick anecdotal evidence. I did similar experiment about three years ago. The conclusion was that if you have SSD, just do quick sort and forget the longer runs, but if you are using hard drives, longer runs is the winner (and safer, to avoid cliffs). I did not experiment with RAID0/5 on many spindles though.

Not limited to sort, more generally, SSD is different enough from HDD, therefore it may worth the effort for backend to "guess" what storage device it has, then choose the right thing to do.

Cheers.

On Thu, Aug 20, 2015 at 12:42 PM, Feng Tian <ftian@vitessedata.com> wrote: > Just a quick anecdotal evidence. I did similar experiment about three years > ago. The conclusion was that if you have SSD, just do quick sort and > forget the longer runs, but if you are using hard drives, longer runs is the > winner (and safer, to avoid cliffs). I did not experiment with RAID0/5 on > many spindles though. > > Not limited to sort, more generally, SSD is different enough from HDD, > therefore it may worth the effort for backend to "guess" what storage device > it has, then choose the right thing to do. The devil is in the details. I cannot really comment on such a general statement. I would be willing to believe that that's true under unrealistic/unrepresentative conditions. Specifically, when multiple passes are required with a sort-merge strategy where that isn't the case with replacement selection. This could happen with a tiny work_mem setting (tiny in an absolute sense more than a relative sense). With an HDD, where sequential I/O is so much faster, this could be enough to make replacement selection win, just as it would have in the 1970s with magnetic tapes. As I've said, the solution is to simply avoid multiple passes, which should be possible in virtually all cases because of the quadratic growth in a classic hybrid sort-merge strategy's capacity to avoid multiple passes (growth relative to work_mem's growth). Once you ensure that, then you probably have a mostly I/O bound workload, which can be made faster by adding sequential I/O capacity (or, on the Postgres internals side, adding asynchronous I/O, or with memory prefetching). You cannot really buy a faster CPU to make a degenerate heapsort faster. -- Peter Geoghegan

On Thu, Aug 20, 2015 at 1:16 PM, Peter Geoghegan <pg@heroku.com> wrote:

On Thu, Aug 20, 2015 at 12:42 PM, Feng Tian <ftian@vitessedata.com> wrote:

> Just a quick anecdotal evidence. I did similar experiment about three years

> ago. The conclusion was that if you have SSD, just do quick sort and

> forget the longer runs, but if you are using hard drives, longer runs is the

> winner (and safer, to avoid cliffs). I did not experiment with RAID0/5 on

> many spindles though.

>

> Not limited to sort, more generally, SSD is different enough from HDD,

> therefore it may worth the effort for backend to "guess" what storage device

> it has, then choose the right thing to do.

The devil is in the details. I cannot really comment on such a general

statement.

I would be willing to believe that that's true under

unrealistic/unrepresentative conditions. Specifically, when multiple

passes are required with a sort-merge strategy where that isn't the

case with replacement selection. This could happen with a tiny

work_mem setting (tiny in an absolute sense more than a relative

sense). With an HDD, where sequential I/O is so much faster, this

could be enough to make replacement selection win, just as it would

have in the 1970s with magnetic tapes.

As I've said, the solution is to simply avoid multiple passes, which

should be possible in virtually all cases because of the quadratic

growth in a classic hybrid sort-merge strategy's capacity to avoid

multiple passes (growth relative to work_mem's growth). Once you

ensure that, then you probably have a mostly I/O bound workload, which

can be made faster by adding sequential I/O capacity (or, on the

Postgres internals side, adding asynchronous I/O, or with memory

prefetching). You cannot really buy a faster CPU to make a degenerate

heapsort faster.

--

Peter Geoghegan

Agree everything in principal,except one thing -- no, random IO on HDD in 2010s (relative to CPU/Memory/SSD), is not any faster than tape in 1970s. :-)

On Thu, Aug 20, 2015 at 1:28 PM, Feng Tian <ftian@vitessedata.com> wrote: > Agree everything in principal,except one thing -- no, random IO on HDD in > 2010s (relative to CPU/Memory/SSD), is not any faster than tape in 1970s. > :-) Sure. The advantage of replacement selection could be a deciding factor in unrepresentative cases, as I mentioned, but even then it's not going to be a dramatic difference as it would have been in the past. By the way, please don't top-post. -- Peter Geoghegan

On Thu, Aug 20, 2015 at 6:05 AM, Greg Stark <stark@mit.edu> wrote: > On Thu, Aug 20, 2015 at 3:24 AM, Peter Geoghegan <pg@heroku.com> wrote: >> I believe, in general, that we should consider a multi-pass sort to be >> a kind of inherently suspect thing these days, in the same way that >> checkpoints occurring 5 seconds apart are: not actually abnormal, but >> something that we should regard suspiciously. Can you really not >> afford enough work_mem to only do one pass? Does it really make sense >> to add far more I/O and CPU costs to avoid that other tiny memory >> capacity cost? > > I think this is the crux of the argument. And I think you're > basically, but not entirely, right. I agree that that's the crux of my argument. I disagree about my not being entirely right. :-) > The key metric there is not how cheap memory has gotten but rather > what the ratio is between the system's memory and disk storage. The > use case I think you're leaving out is the classic "data warehouse" > with huge disk arrays attached to a single host running massive > queries for hours. In that case reducing run size will reduce I/O > requirements directly and halving the amount of I/O sort takes will > halve the time it takes regardless of cpu efficiency. And I have a > suspicion typical data distributions get much better than a 2x > speedup. It could reduce seek time, which might be the dominant cost (but not I/O as such). I do accept that my argument did not really apply to this case, but you seem to be making an additional non-conflicting argument that certain data warehousing cases would be helped in another way by my patch. My argument was only about multi-gigabyte cases that I tested that were significantly improved, primarily due to CPU caching effects. If this helps with extremely large sorts that do require multiple passes by reducing seek time -- I think that they'd have to be multi-terabyte sorts, which I am ill-equipped to test -- then so much the better, I suppose. In any case, as I've said the way we allow run size to be dictated only by available memory (plus whatever replacement selection can do to make on-tape runs longer) is bogus. In the future there should be a cost model for an optimal run size, too. > But I think you're basically right that this is the wrong use case to > worry about for most users. Even those users that do have large batch > queries are probably not processing so much that they should be doing > multiple passes. The ones that do are are probably more interested in > parallel query, federated databases, column stores, and so on rather > than worrying about just how many hours it takes to sort their > multiple terabytes on a single processor. I suppose so. If you can afford multiple terabytes of storage, you can probably still afford gigabytes of memory to do a single pass. My laptop is almost 3 years old, weighs about 1.5 Kg, and has 16 GiB of memory. It's usually always that simple, and not really because we assume that Postgres doesn't have to deal with multi-terabyte sorts. Maybe I lack perspective, having never really dealt with a real data warehouse. I didn't mean to imply that in no circumstances could anyone profit from a multi-pass sort. If you're using Hadoop or something, I imagine that it still makes sense. In general, I think you'll agree that we should strongly leverage the fact that a multi-pass sort just isn't going to be needed when things are set up correctly under standard operating conditions nowadays. > I am quite suspicious of quicksort though. It has O(n^2) worst case > and I think it's only a matter of time before people start worrying > about DOS attacks from users able to influence the data ordering. It's > also not very suitable for GPU processing. Quicksort gets most of its > advantage from cache efficiency, it isn't a super efficient algorithm > otherwise, are there not other cache efficient algorithms to consider? I think that high quality quicksort implementations [1] will continue to be the way to go for sorting integers internally at the very least. Practically speaking, problems with the worst case performance have been completely ironed out since the early 1990s. I think it's possible to DOS Postgres by artificially introducing a worst-case, but it's very unlikely to be the easiest way of doing that in practice. I admit that it's probably the coolest way, though. I think that the benefits of offloading sorting to the GPU are not in evidence today. This may be especially true of a "street legal" implementation that takes into account all of the edge cases, as opposed to a hand customized thing for sorting uniformly distributed random integers. GPU sorts tend to use radix sort, and I just can't see that catching on. [1] https://www.cs.princeton.edu/~rs/talks/QuicksortIsOptimal.pdf -- Peter Geoghegan

On Thu, Aug 20, 2015 at 11:16 PM, Peter Geoghegan <pg@heroku.com> wrote: > It could reduce seek time, which might be the dominant cost (but not > I/O as such). No I didn't quite follow the argument to completion. Increasing the run size is a win if it reduces the number of passes. In the single-pass case it has to read all the data once, write it all out to tapes, then read it all back in again.So 3x the data. If it's still not sorted it needs to write it all back out yet again and read it all back in again. So 5x the data. If the tapes are larger it can avoid that 66% increase in total I/O. In large data sets it can need 3, 4, or maybe more passes through the data and saving one pass would be a smaller incremental difference. I haven't thought through the exponential growth carefully enough to tell if doubling the run size should decrease the number of passes linearly or by a constant number. But you're right that seems to be less and less a realistic scenario. Times when users are really processing data sets that large nowadays they'll just throw it into Hadoop or Biigquery or whatever to get the parallelism of many cpus. Or maybe Citus and the like. The main case where I expect people actually run into this is in building indexes, especially for larger data types (which come to think of it might be exactly where the comparison is expensive enough that quicksort's cache efficiency isn't helpful). But to do fair tests I would suggest you configure work_mem smaller (since running tests on multi-terabyte data sets is a pain) and sort some slower data types that don't fit in memory. Maybe arrays of text or json? -- greg

On Thu, Aug 20, 2015 at 5:02 PM, Greg Stark <stark@mit.edu> wrote: > I haven't thought through the exponential > growth carefully enough to tell if doubling the run size should > decrease the number of passes linearly or by a constant number. It seems that with 5 times the data that previously required ~30MB to avoid a multi-pass sort (where ~2300MB is required for an internal sort -- the benchmark query), it took ~60MB to avoid a multi-pass sort. I guess I just didn't exactly determine either threshold due to that taking too long, and that as predicted, every time the input size quadruples, the required amount of work_mem to avoid multiple passes only doubles. That will need to be verified more vigorously, but it looks that way. > But you're right that seems to be less and less a realistic scenario. > Times when users are really processing data sets that large nowadays > they'll just throw it into Hadoop or Biigquery or whatever to get the > parallelism of many cpus. Or maybe Citus and the like. I'm not sure that even that's generally true, simply because sorting a huge amount of data is very expensive -- it's not really a "big data" thing, so to speak. Look at recent results on this site: http://sortbenchmark.org Last year's winning "Gray" entrant, TritonSort, uses a huge parallel cluster of 186 machines, but only sorts 100TB. That's just over 500GB per node. Each node is a 32 core Intel Xeon EC2 instance with 244GB memory, and lots of SSDs. It seems like the point of the 100TB minimum rule in the "Gray" contest category is that that's practically impossible to fit entirely in memory (to avoid merging). Eventually, linearithmic growth becomes extremely painful, not matter how much processing power you have. It takes a while, though. -- Peter Geoghegan

On 20 August 2015 at 18:41, Peter Geoghegan <pg@heroku.com> wrote:

--

On Thu, Aug 20, 2015 at 8:15 AM, Simon Riggs <simon@2ndquadrant.com> wrote:

> On 20 August 2015 at 03:24, Peter Geoghegan <pg@heroku.com> wrote:

>>

>>

>> The patch is ~3.25x faster than master

>

>

> I've tried to read this post twice and both times my work_mem overflowed.

> ;-)

>

> Can you summarize what this patch does? I understand clearly what it doesn't

> do...

The most important thing that it does is always quicksort runs, that

are formed by simply filling work_mem with tuples in no particular

order, rather than trying to make runs that are twice as large as

work_mem on average. That's what the ~3.25x improvement concerned.

That's actually a significantly simpler algorithm than replacement

selection, and appears to be much faster.

Then I think this is fine, not least because it seems like a first step towards parallel sort.

This will give more runs, so merging those needs some thought. It will also give a more predictable number of runs, so we'll be able to predict any merging issues ahead of time. We can more easily find out the min/max tuple in each run, so we only merge overlapping runs.

You might even say that it's

a dumb algorithm, because it is less sophisticated than replacement

selection. However, replacement selection tends to use CPU caches very

poorly, while its traditional advantages have become dramatically less

important due to large main memory sizes in particular. Also, it hurts

that we don't currently dump tuples in batches, for several reasons.

Better to do memory intense operations in batch, rather than having a

huge inner loop, in order to minimize or prevent instruction cache

misses. And we can better take advantage of asynchronous I/O.

The complicated aspect of considering the patch is whether or not it's

okay to not use replacement selection anymore -- is that an

appropriate trade-off?

Using a heapsort is known to be poor for large heaps. We previously discussed the idea of quicksorting the first chunk of memory, then reallocating the heap as a smaller chunk for the rest of the sort. That would solve the cache miss problem.

I'd like to see some discussion of how we might integrate aggregation and sorting. A heap might work quite well for that, whereas quicksort doesn't sound like it would work as well.

The reason that the code has not actually been simplified by this

patch is that I still want to use replacement selection for one

specific case: when it is anticipated that a "quicksort with

spillover" can occur, which is only possible with incremental

spilling. That may avoid most I/O, by spilling just a few tuples using

a heap/priority queue, and quicksorting everything else. That's

compelling when you can manage it, but no reason to always use

replacement selection for the first run in the common case where there

well be several runs in total.

I think its premature to retire that algorithm - I think we should keep it for a while yet. I suspect it may serve well in cases where we have low memory, though I accept that is no longer the case for larger servers that we would now call typical.

This could cause particular issues in optimization, since heap sort is wonderfully predictable. We'd need a cost_sort() that was slightly pessimistic to cover the risk that a quicksort might not be as fast as we hope.

Is that any clearer?

Yes, thank you.

I'd like to see a more general and concise plan for how sorting evolves. We are close to having the infrastructure to perform intermediate aggregation, which would allow that to happen during sorting when required (aggregation, sort distinct). We also agreed some time back that parallel sorting would be the first incarnation of parallel operations, so we need to consider that also.

Simon Riggs http://www.2ndQuadrant.com/

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

On Thu, Aug 20, 2015 at 11:56 PM, Simon Riggs <simon@2ndquadrant.com> wrote: > This will give more runs, so merging those needs some thought. It will also > give a more predictable number of runs, so we'll be able to predict any > merging issues ahead of time. We can more easily find out the min/max tuple > in each run, so we only merge overlapping runs. I think that merging runs can be optimized to reduce the number of cache misses. Poul-Henning Kamp, the FreeBSD guy, has described problems with binary heaps and cache misses [1], and I think we could use his solution for merging. But we should definitely still quicksort runs. > Using a heapsort is known to be poor for large heaps. We previously > discussed the idea of quicksorting the first chunk of memory, then > reallocating the heap as a smaller chunk for the rest of the sort. That > would solve the cache miss problem. > > I'd like to see some discussion of how we might integrate aggregation and > sorting. A heap might work quite well for that, whereas quicksort doesn't > sound like it would work as well. If you're talking about deduplicating within tuplesort, then there are techniques. I don't know that that needs to be an up-front priority of this work. > I think its premature to retire that algorithm - I think we should keep it > for a while yet. I suspect it may serve well in cases where we have low > memory, though I accept that is no longer the case for larger servers that > we would now call typical. I have given one case where I think the first run should still use replacement selection: where that enables a "quicksort with spillover". For that reason, I would consider that I have not actually proposed to retire the algorithm. In principle, I agree with also using it under any other circumstances where it is likely to be appreciably faster, but it's just not in evidence that there is any other such case. I did look at all the traditionally sympathetic cases, as I went into, and it still seemed to not be worth it at all. But by all means, if you think I missed something, please show me a test case. > This could cause particular issues in optimization, since heap sort is > wonderfully predictable. We'd need a cost_sort() that was slightly > pessimistic to cover the risk that a quicksort might not be as fast as we > hope. Wonderfully predictable? Really? It's totally sensitive to CPU cache characteristics. I wouldn't say that at all. If you're alluding to the quicksort worst case, that seems like the wrong thing to worry about. The risk around that is often overstated, or based on experience from third-rate implementations that don't follow various widely accepted recommendations from the research community. > I'd like to see a more general and concise plan for how sorting evolves. We > are close to having the infrastructure to perform intermediate aggregation, > which would allow that to happen during sorting when required (aggregation, > sort distinct). We also agreed some time back that parallel sorting would be > the first incarnation of parallel operations, so we need to consider that > also. I agree with everything you say here, I think. I think it's appropriate that this work anticipate adding a number of other optimizations in the future, at least including: * Parallel sort using worker processes. * Memory prefetching. * Offset-value coding of runs, a compression technique that was used in System R, IIRC. This can speed up merging a lot, and will save I/O bandwidth on dumping out runs. * Asynchronous I/O. There should be an integrated approach to applying every possible optimization, or at least leaving the possibility open. A lot of these techniques are complementary. For example, there are significant benefits where the "onlyKey" optimization is now used with external sorts, which you get for free by using quicksort for runs. In short, I am absolutely on-board with the idea that these things need to be anticipated at the very least. For another speculative example, offset coding makes the merge step cheaper, but the work of doing the offset coding can be offloaded to worker processes, whereas the merge step proper cannot really be effectively parallelized -- those two techniques together are greater than the sum of their parts. One big problem that I see with replacement selection is that it makes most of these things impossible. In general, I think that parallel sort should be an external sort technique first and foremost. If you can only parallelize an internal sort, then running out of road when there isn't enough memory to do the sort in memory becomes a serious issue. Besides, you need to partition the input anyway, and external sorting naturally needs to do that while not precluding runs not actually being dumped to disk. [1] http://queue.acm.org/detail.cfm?id=1814327 -- Peter Geoghegan

On Wed, Aug 19, 2015 at 7:24 PM, Peter Geoghegan <pg@heroku.com> wrote: > Let's start by comparing an external sort that uses 1/3 the memory of > an internal sort against the master branch. That's completely unfair > on the patch, of course, but it is a useful indicator of how well > external sorts do overall. Although an external sort surely cannot be > as fast as an internal sort, it might be able to approach an internal > sort's speed when there is plenty of I/O bandwidth. That's a good > thing to aim for, I think. > The patch only takes ~10% more time to execute this query, which seems > very good considering that ~1/3 the work_mem has been put to use. > Note that the on-tape runs are small relative to CPU costs, so this > query is a bit sympathetic (consider the time spent writing batches > that trace_sort indicates here). CREATE INDEX would not compare so > well with an internal sort, for example, especially if it was a > composite index or something. This is something that I've made great progress on (see "concrete example" below for numbers). The differences in the amount of I/O required between these two cases (due to per-case variability in the width of tuples written to tape for datum sorts and index sorts) did not significantly factor in to the differences in performance, it turns out. The big issue was that while a pass-by-value datum sort accidentally has good cache characteristics during the merge step, that is not generally true. I figured out a way of making it generally true, though. I attach a revised patch series with a new commit that adds an optimization to the merge step, relieving what was a big remaining bottleneck in the CREATE INDEX case (and *every* external sort case that isn't a pass-by-value datum sort, which is most things). There are a few tweaks to earlier commits including, but nothing very interesting. All of my benchmarks suggests that this most recent revision puts external sorting within a fairly small margin of a fully internal sort on the master branch in many common cases. This difference is seen when the implementation only makes use of a fraction of the memory required for an internal sort, provided the system is reasonably well balanced. For a single backend, there is an overhead of about 5% - 20% against master's internal sort performance. This speedup appears to be fairly robust across a variety of different cases. I particularly care about CREATE INDEX, since that is where most pain is felt in the real world, and I'm happy that I found a way to make CREATE INDEX external sort reasonably comparable in run time to internal sorts that consume much more memory. I think it's time to stop talking about this as performance work, and start talking about it as scalability work. With that in mind, I'm mostly going to compare the performance of the new, optimized external sort implementation with the existing internal sort implementation from now on. New patch -- Sequential memory access =============================== The trick I hit upon for relieving the merge bottleneck was fairly simple. Prefetching works for internal sorts, but isn't practical for external sorts while merging. OTOH, I can arrange to have runs allocate their "tuple proper" contents into a memory pool, partitioned by final on-the-fly tape number. Today, runs/tapes are slurped from disk sequentially in a staggered fashion, based on the availability of in-memory tuples from each tape while merging. The new patch is very effective in reducing cache misses by simply making sure that each tape's "tuple proper" (e.g. each IndexTuple) is accessed in memory in the natural, predictable order (the sorted order that runs on tape always have). Unlike with internal sorts (where explicit memory prefetching of each "tuple proper" may be advisable), the final order in which the caller must consume a tape's "tuple proper" is predictable well in advance. A little rearrangement is required to make what were previously retail palloc() calls during prereading (a palloc() for each "tuple proper", within each READTUP() routine) consume space from the memory pool instead. The pool (a big, once-off memory allocation) is reused in a circular fashion per tape partition. This saves a lot of palloc() overhead. Under this scheme, each tape's next few IndexTuples are all in one cacheline. This patch has the merge step make better use of available memory bandwidth, rather than attempting to conceal memory latency. Explicit prefetch instructions (that we may independently end up using to do something similar with internal sorts when fetching tuples following sorting proper) are all about hiding latency. Concrete example -- performance --------------------------------------------- I attach a text file describing a practical, reproducible example CREATE INDEX. It shows how CREATE INDEX now compares fairly well with an equivalent operation that has enough maintenance_work_mem to complete its sort internally. I'll just summarize it here: A CREATE INDEX on a single int4 attribute on an unlogged table takes only ~18% longer. This is a 100 million row table that is 4977 MB on disk. On master, CREATE INDEX takes 66.6 seconds in total with an *internal* sort. With the patch series applied, an *external* sort involving a final on-the-fly merge of 6 runs takes 78.5 seconds. Obviously, since there are 6 runs to merge, work_mem is only approximately 1/6 of what is required for a fully internal sort. High watermark memory usage ------------------------------------------ One concern about the patch may be that it increases the high watermark memory usage by any on-the-fly final merge step. It takes full advantage of the availMem allowance at a point where every "tuple proper" is freed, and availMem has only had SortTuple/memtuples array "slot" memory subtracted (plus overhead). Memory is allocated in bulk once, and partitioned among active tapes, with no particular effort towards limiting memory usage beyond enforcing that we always !LACKMEM(). A lot of the overhead of many retail palloc() calls is removed by simply using one big memory allocation. In practice, LACKMEM() will rarely become true, because the availability of slots now tends to be the limiting factor. This is partially explained by the number of slots being established when palloc() overhead was in play, prior to the final merge step. However, I have concerns about the memory usage of this new approach. With the int4 CREATE INDEX case above, which has a uniform distribution, I noticed that about 40% of each tape's memory space remains unused when slots are exhausted. Ideally, we'd only have allocated enough memory to run out at about the same time that slots are exhausted, since the two would be balanced. This might be possible for fixed-sized tuples. I have not allocated each final on-the-fly merge step's active tape's pool individually, because while this waste of memory is large enough to be annoying, it's not large enough to be significantly helped by managing a bunch of per-tape buffers and enlarging them as needed geometrically (e.g. starting small, and doubling each time the buffer size is hit until the per-tape limit is finally reached). The main reason that the high watermark is increased is not because of this, though. It's mostly just that "tuple proper" memory is not freed until the sort is done, whereas before there were many small pfree() calls to match the many palloc() calls -- calls that occurred early and often. Note that the availability of "slots" (i.e. the size of the memtuples array, minus one element for each tape's heap item) is currently determined by whatever size it happened to be at when memtuples stopped growing, which isn't particularly well principled (hopefully this is no worse now). Optimal memory usage ------------------------------- In the absence of any clear thing to care about most beyond making sorting faster while still enforcing !LACKMEM(), for now I've kept it simple. I am saving a lot of memory by clawing back palloc() overhead, but may be wasting more than that in another way now, to say nothing of the new high watermark itself. If we're entirely I/O bound, maybe we should not waste memory by simply not allocating as much anyway (i.e. the extra memory may only theoretically help even when it is written to). But what does it really mean to be I/O bound? The OS cache probably consumes plenty of memory, too. Finally, let us not forget that it's clearly still the case that even following this work, run size needs to be optimized using a cost model, rather than simply being determined by how much memory can be made available (work_mem). If we get a faster sort using far less work_mem, then the DBA is probably accidentally wasting huge amounts of memory due to failing to do that. As an implementor, it's really hard to balance all of these concerns, or to say that one in particular is most urgent. Parallel sorting =========== Simon rightly emphasized the need for joined-up thinking in relation to applying important tuplesort optimizations. We must at least consider parallelism as part of this work. I'm glad that the first consumer of parallel infrastructure is set to be parallel sequential scans, not internal parallel sorts. That's because it seems that overall, a significant cost is actually reading tuples into memtuples to sort -- heap scanning and related costs in the buffer manager (even assuming everything is in shared_buffers), COPYTUP() palloc() calls, and so on. Taken together, they can be a bigger overall cost than sorting proper, even assuming abbreviated keys are not used. The third bucket that I tend to categorize costs into, "time spent actually writing out finished runs", is small on a well balanced system. Surprisingly small, I would say. I will sketch a simple implementation of parallel sorting based on the patch series that may be workable, and requires relatively little implementation effort compare to other ideas that were raised at various times: * Establish an optimal run size ahead of time using a cost model. We need this for serial external sorts anyway, to relieve the DBA of having to worry about sizing maintenance_work_mem according to obscure considerations around cache efficiency within tuplesort. Parallelism probably doesn't add much complexity to the cost model, which is not especially complicated to begin with. Note that I have not added this cost model yet (just the ad-hoc, tuplesort-private cost model for using replacement selection to get a "quicksort with spillover"). It may be best if this cost model lives in the optimizer. * Have parallel workers do a parallel heap scan of the relation until they fill this optimal run size. Use local memory to sort within workers. Write runs out in the usual way. Then, the worker picks up the next run scheduled. If there are no more runs to build, there is no more work for the parallel workers. * Shut down workers. Do an on-the-fly merge in the parent process. This is the same as with a serial merge, but with a little coordination with worker processes to make sure every run is available, etc. In general, coordination is kept to an absolute minimum. I tend to think that this really simple approach would get much of the gain of something more complicated -- no need to write shared memory management code, minimal need to handle coordination between workers, and no real changes to the algorithms used for each sub-problem. This makes merging more of a bottleneck again, but that is a bottleneck on I/O and especially memory bandwidth. Parallelism cannot help much with that anyway (except by compressing runs with offset coding, perhaps, but that isn't specific to parallelism and won't always help). Writing out runs in bulk is very fast here -- certainly much faster than I thought it would be when I started thinking about external sorting. And if that turns out to be a problem for cases that have sufficient memory to do everything internally, that can later be worked on non-invasively. As I've said in the past, I think parallel sorting only makes sense when memory latency and bandwidth are not huge bottlenecks, which we should bend over backwards to avoid. In a sense, you can't really make use of parallel workers for sorting until you fix that problem first. I am not suggesting that we do this because it's easier than other approaches. I think it's actually most effective to not make parallel sorting too divergent from serial sorting, because making things cumulative makes speed-ups from localized optimizations cumulative, while at the same time, AFAICT there isn't anything to recommend extensive specialization for parallel sort. If what I've sketched is also a significantly easier approach, then that's a bonus. -- Peter Geoghegan

Вложения

- quicksort_external_test.txt

- 0005-Use-tuple-proper-memory-pool-in-tuplesort.patch

- 0004-Prefetch-from-memtuples-array-in-tuplesort.patch

- 0003-Log-requirement-for-multiple-external-sort-passes.patch

- 0002-Further-diminish-role-of-replacement-selection.patch

- 0001-Quicksort-when-performing-external-sorts.patch

>I will sketch a simple implementation of parallel sorting based on the >patch series that may be workable, and requires relatively little >implementation effort compare to other ideas that were raised at >various times: Hello, I've only a very superficial understanding on your work, please apologize if this is off topic or if this was already discussed... Have you considered performances for cases where multiple CREATE INDEX are running in parallel? One of our typical use case are large daily tables (50-300 Mio rows) with up to 6 index creations that start simultaneously. Our servers have 40-60 GB RAM , ca. 12 CPUs and we set maintenance mem to 1-2 GB for this. If the create index themselves start using parallelism, I guess that we might need to review our workflow... best regards, Marc Mamin

On Sun, Sep 6, 2015 at 1:51 AM, Marc Mamin <M.Mamin@intershop.de> wrote: > Have you considered performances for cases where multiple CREATE INDEX are running in parallel? > One of our typical use case are large daily tables (50-300 Mio rows) with up to 6 index creations > that start simultaneously. > Our servers have 40-60 GB RAM , ca. 12 CPUs and we set maintenance mem to 1-2 GB for this. > If the create index themselves start using parallelism, I guess that we might need to review our workflow... Not particularly. I imagine that that case would be helped a lot here (probably more than a simpler case involving only one CREATE INDEX), because each core would be require fewer main memory accesses overall. Maybe you can test it and let us know how it goes. -- Peter Geoghegan

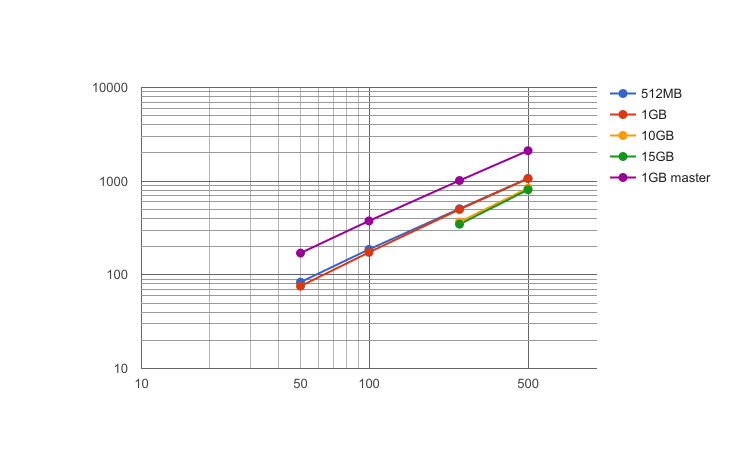

On Wed, Aug 19, 2015 at 7:24 PM, Peter Geoghegan <pg@heroku.com> wrote: > I'll start a new thread for this, since my external sorting patch has > now evolved well past the original "quicksort with spillover" > idea...although not quite how I anticipated it would. It seems like > I've reached a good point to get some feedback. Corey Huinker has once again assisted me with this work, by doing some benchmarking on an AWS instance of his: 32 cores (c3.8xlarge, I suppose) MemTotal: 251902912 kB I believe it had one EBS volume. This testing included 2 data sets: * A data set that he happens to have that is representative of his production use-case. Corey had some complaints about the sort performance of PostgreSQL, particularly prior to 9.5, and I like to link any particular performance optimization to an improvement in an actual production workload, if at all possible. * A tool that I wrote, that works on top of sortbenchmark.org's "gensort" [1] data generation tool. It seems reasonable to me to drive this work in part with a benchmark devised by Jim Gray. He did after all receive a Turing award for this contribution to transaction processing. I'm certainly a fan of his work. A key practical advantage of that is that is has reasonable guarantees about determinism, making these results relatively easy to recreate independently. The modified "gensort" is available from https://github.com/petergeoghegan/gensort The python script postgres_load.py, which performs bulk-loading for Postgres using COPY FREEZE. It ought to be fairly self-documenting: $:~/gensort$ ./postgres_load.py --help usage: postgres_load.py [-h] [-w WORKERS] [-m MILLION] [-s] [-l] [-c] optional arguments: -h, --help show this help message and exit -w WORKERS, --workers WORKERS Number of gensort workers (default: 4) -m MILLION, --million MILLION Generate n million tuples(default: 100) -s, --skew Skew distribution of output keys (default: False) -l, --logged Use loggedPostgreSQL table (default: False) -c, --collate Use default collation rather than C collation (default: False) For this initial report to the list, I'm going to focus on a case involving 16 billion non-skewed tuples generated using the gensort tool. I wanted to see how a sort of a ~1TB table (1017GB as reported by psql, actually) could be improved, as compared to relatively small volumes of data (in the multiple gigabyte range) that were so improved by sorts on my laptop, which has enough memory to avoid blocking on physical I/O much of the time. How the new approach deals with hundreds of runs that are actually reasonably sized is also of interest. This server does have a lot of memory, and many CPU cores. It was kind of underpowered on I/O, though. The initial load of 16 billion tuples (with a sortkey that is "C" locale text) took about 10 hours. My tool supports parallel generation of COPY format files, but serial performance of that stage isn't especially fast. Further, in order to support COPY FREEZE, and in order to ensure perfect determinism, the COPY operations occur serially in a single transaction that creates the table that we performed a CREATE INDEX on. Patch, with 3GB maintenance_work_mem: ... LOG: performsort done (except 411-way final merge): CPU 1017.95s/17615.74u sec elapsed 23910.99 sec STATEMENT: create index on sort_test (sortkey ); LOG: external sort ended, 54740802 disk blocks used: CPU 2001.81s/31395.96u sec elapsed 41648.05 sec STATEMENT: create index on sort_test (sortkey ); So just over 11 hours (11:34:08), then. The initial sorting for 411 runs took 06:38:30.99, as you can see. Master branch: ... LOG: finished writing run 202 to tape 201: CPU 1224.68s/31060.15u sec elapsed 34409.16 sec LOG: finished writing run 203 to tape 202: CPU 1230.48s/31213.55u sec elapsed 34580.41 sec LOG: finished writing run 204 to tape 203: CPU 1236.74s/31366.63u sec elapsed 34750.28 sec LOG: performsort starting: CPU 1241.70s/31501.61u sec elapsed 34898.63 sec LOG: finished writing run 205 to tape 204: CPU 1242.19s/31516.52u sec elapsed 34914.17 sec LOG: finished writing final run 206 to tape 205: CPU 1243.23s/31564.23u sec elapsed 34963.03 sec LOG: performsort done (except 206-way final merge): CPU 1243.86s/31570.58u sec elapsed 34974.08 sec LOG: external sort ended, 54740731 disk blocks used: CPU 2026.98s/48448.13u sec elapsed 55299.24 sec CREATE INDEX Time: 55299315.220 ms So 15:21:39 for master -- it's much improved, but this was still disappointing given the huge improvements on relatively small cases. Finished index was fairly large, which can be seen here by working back from "total relation size": postgres=# select pg_size_pretty(pg_total_relation_size('sort_test'));pg_size_pretty ----------------1487 GB (1 row) I think that this is probably due to the relatively slow I/O on this server, and because the merge step is more of a bottleneck. As we increase maintenance_work_mem, we're likely to then suffer from the lack of explicit asynchronous I/O here. It helps, still, but not dramatically. With with maintenance_work_mem = 30GB, patch is somewhat faster (no reason to think that this would help master at all, so that was untested): ... LOG: starting quicksort of run 40: CPU 1815.99s/19339.80u sec elapsed 24910.38 sec LOG: finished quicksorting run 40: CPU 1820.09s/19565.94u sec elapsed 25140.69 sec LOG: finished writing run 40 to tape 39: CPU 1833.76s/19642.11u sec elapsed 25234.44 sec LOG: performsort starting: CPU 1849.46s/19803.28u sec elapsed 25499.98 sec LOG: starting quicksort of run 41: CPU 1849.46s/19803.28u sec elapsed 25499.98 sec LOG: finished quicksorting run 41: CPU 1852.37s/20000.73u sec elapsed 25700.43 sec LOG: finished writing run 41 to tape 40: CPU 1864.89s/20069.09u sec elapsed 25782.93 sec LOG: performsort done (except 41-way final merge): CPU 1965.43s/20086.28u sec elapsed 25980.80 sec LOG: external sort ended, 54740909 disk blocks used: CPU 3270.57s/31595.37u sec elapsed 40376.43 sec CREATE INDEX Time: 40383174.977 ms So that takes 11:13:03 in total -- we only managed to shave about 20 minutes off the total time taken, despite a 10x increase in maintenance_work_mem. Still, at least it gets moderately better, not worse, which is certainly what I'd expect from the master branch. 60GB was half way between 3GB and 30GB in terms of performance, so it doesn't continue to help, but, again, at least things don't get much worse. Thoughts on these results: * I'd really like to know the role of I/O here. Better, low-overhead instrumentation is required to see when and how we are I/O bound. I've been doing much of that on a more-or-less ad hoc basis so far, using iotop. I'm looking into a way to usefully graph the I/O activity over many hours, to correlate with the trace_sort output that I'll also show. I'm open to suggestions on the easiest way of doing that. Having used the "perf" tool for instrumenting I/O at all in the past. * Parallelism would probably help us here *a lot*. * As I said, I think we suffer from the lack of asynchronous I/O much more at this scale. Will need to confirm that theory. * It seems kind of ill-advised to make run size (which is always in linear proportion to maintenance_work_mem with this new approach to sorting) larger, because it probably will hurt writing runs more than it will help in making merging cheaper (perhaps mostly due to the lack of asynchronous I/O to hide the latency of writes -- Linux might not do so well at this scale). * Maybe adding actual I/O bandwidth is the way to go to get a better picture. I wouldn't be surprised if we were very bottlenecked on I/O here. Might be worth using many parallel EBS volumes here, for example. [1] http://sortbenchmark.org/FAQ-2015.html -- Peter Geoghegan